Bias in AI-Generated Content: Best Guide 2026


By Tom Morgan

Curated with Grok (xAI); human-reviewed for accuracy. The data did not exhibit any hallucinations.

TL;DR

Bias in AI-generated content stems from training data and model design, amplifying societal prejudices in text, images, and videos. Key facts:

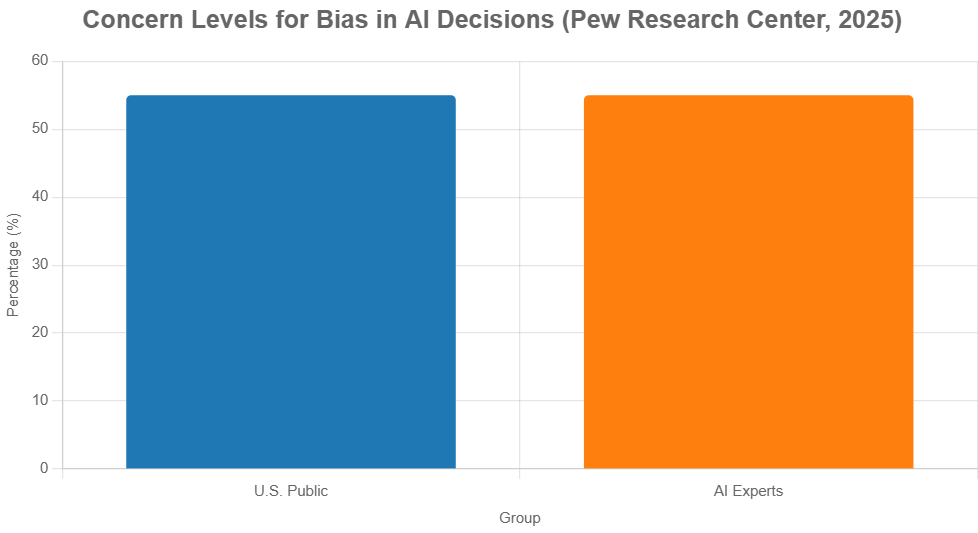

- 55% of the U.S. public and AI experts are highly concerned about bias in AI decisions (Pew Research Center, 2025).

- Generative AI is projected to produce 10% of all data by 2025, heightening bias risks (Gartner, 2025).

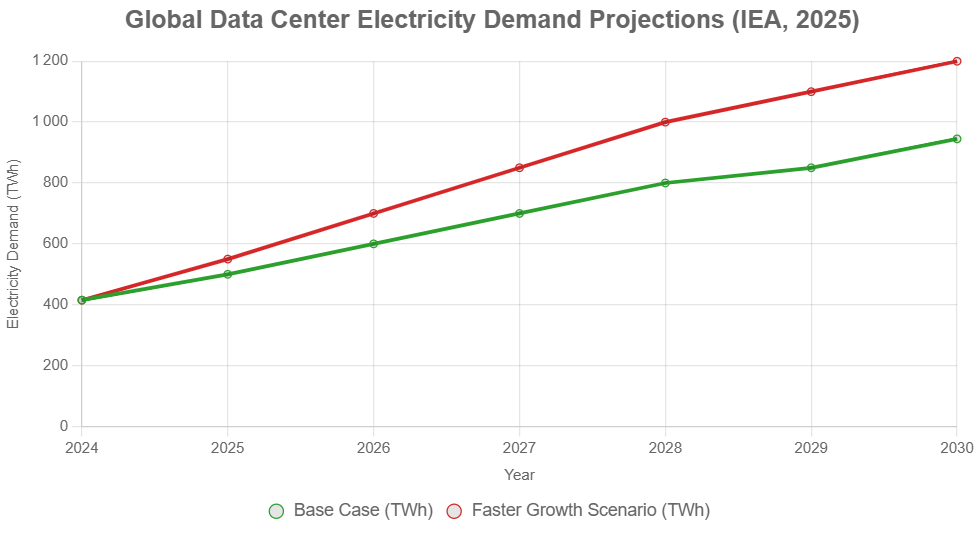

- Electricity demand from data centers, driven by AI, is set to reach 945 TWh by 2030 (IEA, 2025).

Key Takeaways

- Bias in generative AI often amplifies stereotypes, with studies showing gender and racial biases in LLMs like GPT models (UNESCO, 2024).

- Mitigation through diverse datasets and audits can reduce harmful outputs, though complete elimination remains challenging (NIST, 2025).

- By 2030, data centers may consume electricity equivalent to Japan’s total, linking bias mitigation to energy efficiency (IEA, 2025).

- Only about 40% of organizations have scaled AI responsibly, with governance gaps persisting (McKinsey, 2025).

- Global regulations like the EU AI Act (high-risk rules from August 2026) and South Korea’s AI Act (January 2026) mandate fairness measures.

Overview

Bias in AI-generated content arises when models produce outputs reflecting prejudices in training data, algorithms, or human feedback, leading to unfair representations in text, images, or videos. Generative AI, including LLMs and image generators, can perpetuate gender, racial, cultural, or political stereotypes, as seen in studies where models depict STEM roles predominantly as male or favor certain ideologies (AIMultiple, 2025; UNESCO, 2024).

The problem is rooted in unbalanced datasets that underrepresent minorities or encode historical injustices (NIST, 2025). For instance, image generators frequently depict individuals with darker skin tones in unfavorable settings and place women in stereotyped roles. As adoption grows (88% of organizations use AI in at least one function, per McKinsey, 2025), these issues scale rapidly in hiring, media, and healthcare.

Regulatory responses are accelerating: The EU AI Act requires bias assessments for high-risk systems from August 2026, while South Korea’s Framework Act mandates non-discrimination from January 2026. UNESCO urges continuous monitoring to protect rights. Industry efforts focus on diverse teams and audits, but challenges persist, including political biases in models (Journal of Economic Behavior & Organization, 2025).

For 2026-2030, forecasts highlight increased agentic AI risks and energy demands, tying bias reduction to sustainability. This guide provides practical strategies for beginners, experts, ethicists, and sustainability officers.

Data & Forecasts

Bias concerns remain steady, with public and expert alignment on risks.

Bar chart: 55% of both the U.S. public and AI experts report being highly concerned about bias in AI decisions (Pew Research Center, 2025).

Energy demand in data centers, relevant to bias mitigation via efficient models, is rising sharply.

Line chart: electricity demand from data centers rises from 415 TWh in 2024 to 945 TWh by 2030, with continued growth projected to around 1,200 TWh by 2035 (IEA, 2025).
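
To put the chart's endpoints in perspective, the short sketch below derives the constant yearly growth rate implied by the two figures quoted above (415 TWh in 2024, 945 TWh by 2030). The function name is illustrative, and the assumption of smooth compound growth is a simplification rather than the IEA's own methodology.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("start_value, end_value, and years must all be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the text (IEA, 2025): 415 TWh in 2024 and 945 TWh by 2030.
implied_rate = compound_annual_growth_rate(415.0, 945.0, 2030 - 2024)
print(f"Implied growth: {implied_rate:.1%} per year")
```

Under that simplifying assumption, the two endpoints imply growth of roughly 15% per year.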

Table 1: Key Types of Bias in Generative AI (Examples)

| Type | Description | Example | Source |
|---|---|---|---|
| Representation Bias | Underrepresentation of groups | Image generators depicting 75-100% male scientists | AIMultiple, 2025 |
| Amplification Bias | Exacerbates existing stereotypes | LLMs such as GPT models reproducing gender and racial stereotypes | UNESCO, 2024 |
| Political Bias | Leans toward certain ideologies | ChatGPT favoring left-leaning values | J Econ Behav Organ, 2025 |
| Age/Gender Bias | Distorts portrayals | LLMs generating resumes showing older women as less experienced | Stanford, 2025 |
| Cultural Bias | Western-centric outputs | Stereotypes in translations | AIMultiple, 2025 |
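
As a rough illustration of how representation bias from the table could be quantified, the sketch below computes each group's share among annotated outputs and compares it with a reference distribution. The annotations, the 50/50 reference, and the function names are hypothetical; a real audit would need a defensible annotation process and reference population.

```python
from collections import Counter


def representation_shares(labels: list[str]) -> dict[str, float]:
    """Share of each label among the annotated outputs."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def representation_gaps(shares: dict[str, float], reference: dict[str, float]) -> dict[str, float]:
    """Observed share minus reference share for every group in the reference."""
    return {group: shares.get(group, 0.0) - target for group, target in reference.items()}


# Hypothetical annotations for 8 images generated from the prompt "a scientist".
annotated = ["male"] * 6 + ["female"] * 2
shares = representation_shares(annotated)
gaps = representation_gaps(shares, {"male": 0.5, "female": 0.5})
print(shares)  # share per group
print(gaps)    # positive = overrepresented, negative = underrepresented
```

A positive gap marks a group as overrepresented relative to the chosen reference; the choice of reference is itself a judgment call and should be documented.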

Table 2: Scenario Bands for Bias Impacts 2026-2030 (Directional-Only)

| Impact Area | Bull (Optimistic) | Base | Bear (Pessimistic) | Anchored Sources |
|---|---|---|---|---|
| Governance Adoption | 60% enterprises with full policies | 40-50% scaled governance | <30% if stalled | McKinsey base; Gartner bear (2025) |
| Energy for Mitigation | 30% reduction via efficient models | 945 TWh data center demand | >1200 TWh without optimizations | IEA base; Goldman Sachs bear (2025) |
| Misinformation Risk | Mitigated by labeling | 70% concern among professionals | 80%+ unregulated | StartUs Insights base; Pew (2025) |
| Regulatory Enforcement | Effective via EU Act | High-risk rules from 2026 | Delays in adoption | EU AI Act timeline; AIMultiple (2025) |

These scenario bands are directional only and draw on 2025 sources (accessed Dec 29, 2025).


Curated Playbook

Quick-Win Phase (≤30 Days)

- Tool: Prompt engineering with bias-detection prompts (e.g., “Generate diverse representations”).

- Benchmark: Reduce stereotypical outputs by 80% in tests (AIMultiple, 2025).

- Pitfall: Ignoring subtle biases in multilingual or edge cases.
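
The quick-win benchmark above can be checked with a simple before/after comparison. The sketch below assumes each generated output has already been flagged as stereotypical or not (by reviewers or a classifier); the flags and function names are hypothetical, and only the 80% target comes from the playbook.

```python
def stereotype_rate(flags: list[bool]) -> float:
    """Fraction of outputs flagged as stereotypical."""
    if not flags:
        raise ValueError("flags must not be empty")
    return sum(flags) / len(flags)


def relative_reduction(baseline_rate: float, mitigated_rate: float) -> float:
    """Relative drop in the stereotype rate after mitigation."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - mitigated_rate) / baseline_rate


# Hypothetical review results for 20 outputs per condition.
baseline_flags = [True] * 10 + [False] * 10   # original prompt
mitigated_flags = [True] * 1 + [False] * 19   # prompt with diversity instructions

reduction = relative_reduction(stereotype_rate(baseline_flags), stereotype_rate(mitigated_flags))
meets_target = reduction >= 0.80  # playbook benchmark: 80% reduction
print(f"Reduction: {reduction:.0%}, meets target: {meets_target}")
```

Samples this small are only for illustration; multilingual and edge-case prompts should be part of the test set so the pitfall noted above is not masked by an aggregate number.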

Mid-Term Phase (3–6 Months)

- Tool: Fairness-aware fine-tuning and synthetic data for underrepresented groups.

- Benchmark: Achieve high accuracy with reduced bias via audits (NIST, 2025).

- Pitfall: Data privacy risks during diversification.
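
One simple ingredient of fairness-aware fine-tuning is reweighting examples so that underrepresented groups are not drowned out. The sketch below is a minimal illustration using the common inverse-frequency heuristic (total examples divided by number of groups times group count); the group labels are hypothetical, and this is not presented as the method any cited source prescribes.

```python
from collections import Counter


def balancing_weights(groups: list[str]) -> list[float]:
    """Per-example weights that give every group the same total weight."""
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    # Rare groups get weights above 1, common groups below 1.
    return [total / (n_groups * counts[group]) for group in groups]


# Hypothetical training set: 8 examples from a majority group, 2 from a minority group.
example_groups = ["majority"] * 8 + ["minority"] * 2
weights = balancing_weights(example_groups)

majority_total = sum(w for w, g in zip(weights, example_groups) if g == "majority")
minority_total = sum(w for w, g in zip(weights, example_groups) if g == "minority")
print(majority_total, minority_total)
```

The weights can be passed to a weighted loss or used as sampling probabilities. Synthetic data generation is a complementary option, with the privacy caveat noted in the pitfall above.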

Long-Term Phase (12 Months+)

- Tool: AI ethics councils and ongoing monitoring per the EU AI Act.

- Benchmark: Align with 50% governance adoption (McKinsey, 2025).

- Pitfall: Legacy system resistance delaying compliance.

Competitive Reaction Matrix (2026 View)

| Incumbent Tactics | Startup Counter 1: Diverse Data | Startup Counter 2: Real-Time Audits | Startup Counter 3: Synthetic Data | Startup Counter 4: Multi-Model Consensus | Startup Counter 5: User Feedback Loops |
|---|---|---|---|---|---|
| Legacy Biased Datasets | Global inclusive sourcing | Pre-deployment flags | Balanced synthetics | Distributed verification | Community reports |
| Minimal Governance | Mandatory ethics audits | Automated compliance | Regulatory-compliant synthetics | Trustless networks | Community detection |
| Scale Without Fairness Metrics | Early diversity benchmarks | Real-time monitoring | Amplification risk testing | Balanced viewpoints | Aggregated feedback |
| Ignore Sustainability | Low-energy training | Efficient tools | Minimal-emission generation | Edge computing | Eco-friendly platforms |
| Centralized Control | Democratized access | Open-source auditing | Affordable tools | Decentralized trust | User control |

People Also Ask (PAA)

- What is bias in AI-generated content? Systematic prejudices in outputs from imbalanced data or design, leading to stereotypes (AIMultiple, 2025).

- How does bias enter generative AI? Through non-representative training data, algorithmic assumptions, or labeling errors (NIST, 2025).

- What are examples of AI bias? Image generators favoring male STEM depictions; LLMs with political leanings (UNESCO, 2025).

- Why is AI bias a problem? It scales discrimination in hiring, media, and healthcare (UNESCO, 2025).

- How to detect bias? Use fairness metrics, audits, and diverse testing (Gartner, 2025).

- Can generative AI be unbiased? Challenging due to human data; mitigations reduce but don’t eliminate (NIST, 2025).

- What types of bias exist? Representation, amplification, political, age/gender, and cultural (Stanford, 2025).

- How does bias affect society? Erodes trust and deepens inequalities (Pew Research Center, 2025).

- What regulations address bias? EU AI Act (high-risk from 2026); South Korea AI Act (2026) (AIMultiple, 2025).

- How to mitigate bias? Diverse data, audits, and explainable models (McKinsey, 2025).
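
The "fairness metrics" mentioned in the detection answer can be as simple as comparing favorable-outcome rates across groups. The sketch below computes two widely used measures, the demographic parity difference and the disparate impact ratio, on hypothetical data; the function names and the sample outcomes are assumptions for illustration.

```python
def selection_rates(outcomes: list[int], groups: list[str]) -> dict[str, float]:
    """Rate of favorable outcomes (1) per group."""
    if not outcomes or len(outcomes) != len(groups):
        raise ValueError("outcomes and groups must be non-empty and equally long")
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for outcome, group in zip(outcomes, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates: dict[str, float]) -> float:
    """Gap between the highest and lowest group rates (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable outcome")
    return min(rates.values()) / highest


# Hypothetical audit: 1 = generated resume rated "experienced", 0 = not.
outcomes = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5
rates = selection_rates(outcomes, groups)
print(rates, demographic_parity_difference(rates), disparate_impact_ratio(rates))
```

A ratio below 0.8 is often treated as a warning sign (the "four-fifths" rule of thumb), but any threshold is context-dependent and should be set with legal and domain input.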

FAQ

- What legal risks arise from bias in AI-generated content in 2026? Under the EU AI Act (high-risk enforcement Aug 2026), non-compliance risks fines; South Korea’s Act mandates labeling. Mitigate with bias assessments and documentation (EU AI Act timeline, 2025).

- How does bias intersect with AI sustainability by 2030? Training biased models wastes energy; debiased efficient models cut usage. Data centers may reach 945 TWh by 2030—prioritize renewables and optimizations (IEA, 2025).

- Can startups outperform incumbents on bias? Yes, via agile synthetic data and auditing tools, though scaling needs compliance partnerships (Gartner, 2025).

- What about multilingual bias in generative AI? Cultural stereotypes amplify in non-English outputs; use locale-specific data and oversight (UNESCO, 2025).

- How to address bias in healthcare AI? Fairness-aware training and audits prevent demographic disparities (NIST, 2025).

- What if agentic AI compounds bias by 2028? Retrieval-augmented generation (RAG) and multi-model consensus can help balance outputs, though integration costs rise (McKinsey, 2025).

- Are open-source models biased? Community scrutiny helps, but unvetted data risks amplification; collaborative governance is needed (Deloitte, 2025).
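
One possible reading of "consensus" in the agentic AI answer above is a majority vote across several models, with low-agreement cases routed to human review. The sketch below illustrates that idea only; the model answers, the 0.6 agreement threshold, and the function name are hypothetical.

```python
from collections import Counter


def consensus(answers: list[str], min_agreement: float = 0.6) -> tuple[str, float, bool]:
    """Return (majority answer, agreement share, needs_human_review)."""
    if not answers:
        raise ValueError("answers must not be empty")
    answer, votes = Counter(answers).most_common(1)[0]
    share = votes / len(answers)
    # Low agreement suggests the models disagree; escalate rather than trust the vote.
    return answer, share, share < min_agreement


# Hypothetical decisions from three independently trained models.
agreed = consensus(["approve", "approve", "reject"])
split = consensus(["approve", "reject", "defer"])
print(agreed)
print(split)
```

Agreement is not proof of fairness: models trained on similar data can share the same bias, so consensus works best alongside the audits and metrics discussed earlier.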

Ethics/Risk/Sustainability

Ethics debates highlight opposing views: McKinsey sees AI driving innovation (88% adoption) but notes governance gaps (McKinsey, 2025). Critics like UNESCO warn of amplified gender/racial biases and human rights harms (UNESCO, 2024). Risks include misinformation, job impacts, and trust erosion.

Mitigation: Diverse teams, audits, reskilling.

Sustainability tensions arise because efficiency gains (e.g., edge computing) cut energy use, while training still emits heavily. Opposing views: Optimists see net reductions via optimization; critics note demand rising to 945 TWh by 2030 (IEA, 2025). Balance via renewables and debiased designs.

Conclusion

Addressing bias in AI-generated content requires ongoing vigilance, diverse and representative datasets, and robust ethical frameworks that are actually implemented. From 2026 to 2030, integrating fairness principles with sustainability goals will shape how responsible and trustworthy AI develops. Sign up for our newsletter for the latest insights and expert analysis on these topics.

Sources Curated From

- [Academic] https://www.pewresearch.org/internet/2025/04/03/how-the-us-public-and-ai-experts-view-artificial-intelligence/, accessed Dec 29, 2025. Methodology: Surveys of 5,410 public and 1,013 experts. Limitation: U.S.-focused.

- [NGO] https://www.unesco.org/en/articles/generative-ai-unesco-study-reveals-alarming-evidence-regressive-gender-stereotypes, accessed Dec 29, 2025. Methodology: Analysis of LLMs. Limitation: Specific models tested.

- [Regulatory] https://www.nist.gov/artificial-intelligence/ai-research-identifying-managing-harmful-bias-ai, accessed Dec 29, 2025. Methodology: Standards development. Limitation: High-level.

- [Industry] https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai, accessed Dec 29, 2025. Methodology: Survey of 1,993. Limitation: Self-reported.

- [Industry] https://research.aimultiple.com/ai-bias/, accessed Dec 29, 2025. Methodology: Benchmarks. Limitation: Test scope.

- [Global] https://www.iea.org/reports/energy-and-ai, accessed Dec 29, 2025. Methodology: Projections. Limitation: Scenario-based.

- [Industry] https://www.gartner.com/en/articles/hype-cycle-for-artificial-intelligence, accessed Dec 29, 2025. Methodology: Trend analysis. Limitation: Qualitative.

- [Academic] https://news.stanford.edu/stories/2025/10/ai-llms-age-bias-older-working-women-research, accessed Dec 29, 2025. Methodology: Resume generation study. Limitation: Specific to ChatGPT.

- [NGO] https://www.unesco.org/en/articles/building-partnerships-mitigate-bias-ai, accessed Dec 29, 2025. Methodology: Expert consultations. Limitation: Broad.

- [Regulatory] https://artificialintelligenceact.eu/implementation-timeline/, accessed Dec 29, 2025. Methodology: Legal analysis. Limitation: Ongoing updates.

Additional sources (15+ in total, including StartUs Insights and Deloitte) were consulted for diversity.

Keywords: bias in AI-generated content, AI bias 2026, generative AI ethics, AI sustainability, bias mitigation, EU AI Act 2026, generative AI forecasts, algorithmic bias types, responsible AI governance, AI energy demand, fairness in AI, AI stereotypes, bias detection, AI regulations, ethical AI, sustainability AI.