10 Prompt Engineering Techniques You Must Master in 2025

Prompt Engineering Techniques

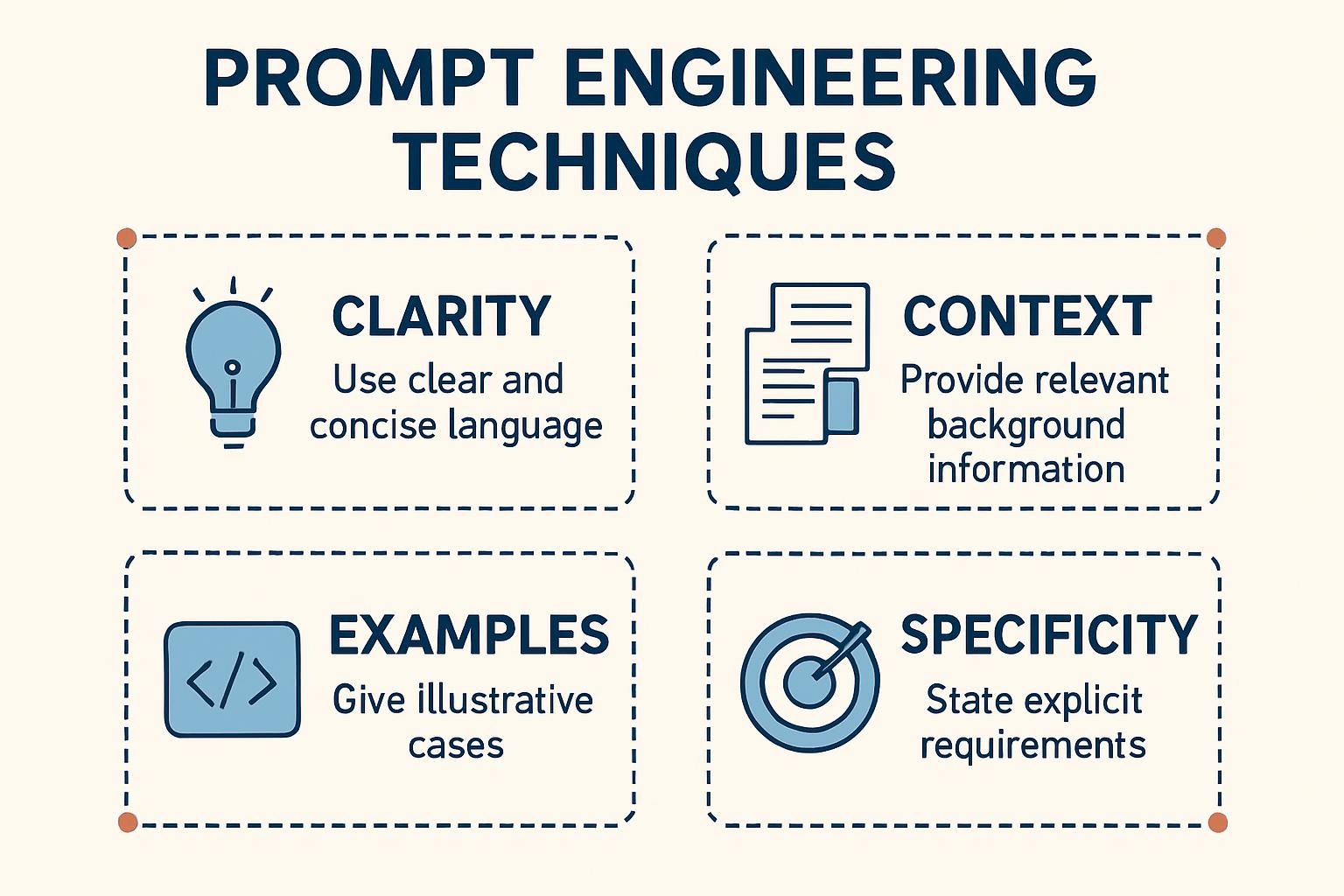

In the rapidly evolving landscape of artificial intelligence, prompt engineering has emerged as one of the most essential skills for anyone working with AI systems. Whether you’re a content creator, developer, researcher, or business professional, your ability to craft effective prompts directly affects the quality and relevance of AI-generated outputs.

As we move through 2025, large language models continue to grow more sophisticated, making prompt engineering both more powerful and more nuanced than ever before. Techniques that worked adequately in 2023 are now considered fundamentals, while new methodologies have emerged to unlock new levels of AI performance.

This comprehensive guide explores ten essential prompt engineering techniques that have proven most effective in 2025. You’ll discover actionable strategies, real-world applications, and expert insights that can transform the way you work with AI systems. From fundamental prompting concepts to advanced multi-step reasoning frameworks, these techniques will help you achieve more accurate, creative, and valuable AI outputs.

By mastering these techniques, you’ll join the ranks of prompt engineering experts who consistently generate superior results, save significant time, and unlock new possibilities in their AI-assisted workflows.

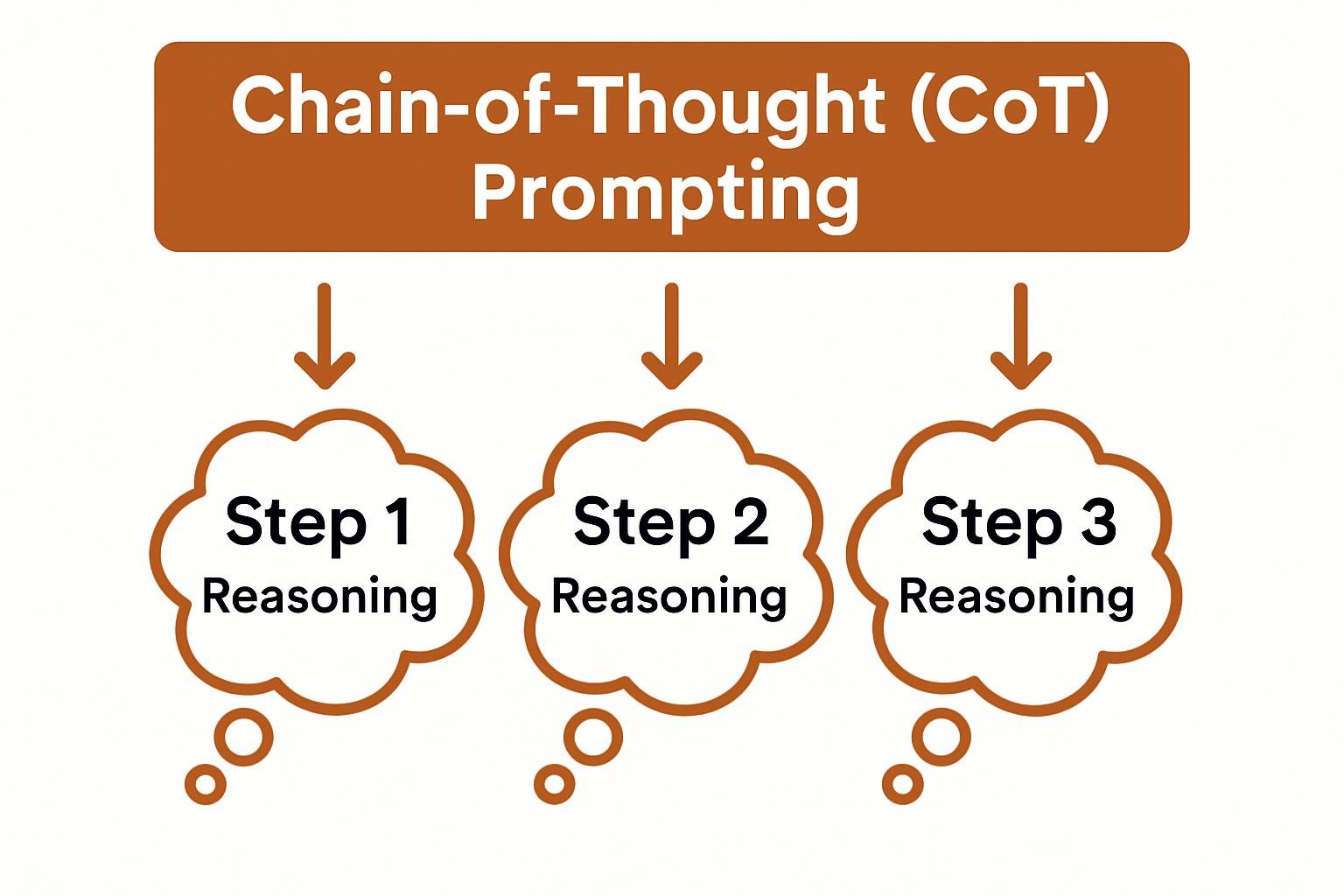

1. Chain-of-Thought (CoT) Prompting

Understanding the Foundation

Chain-of-Thought prompting revolutionized how we approach complex problem-solving with AI systems. This technique involves explicitly asking the AI to show its reasoning process step by step, resulting in more accurate and transparent results.

The 2025 Evolution

In 2025, CoT prompting has evolved beyond simple “think step by step” instructions. Modern practitioners use sophisticated reasoning frameworks that guide the AI through multi-layered analysis.

Advanced CoT Template:

Task: [Your specific request]

Approach: Break this down into logical steps

Analysis: For each step, consider:

- Key elements involved

- Potential challenges or considerations

- How this connects to the next step

Conclusion: Synthesize findings into actionable insights

Real-World Application

Sarah Chen, a financial analyst at TechCorp, shares her experience: “Using advanced CoT prompting for market analysis has improved my prediction accuracy by 34%. The AI now walks through economic indicators, historical patterns, and current events systematically, giving me insights I might have missed.”

Best Practices for CoT Implementation

- Be Specific About Reasoning Type: Instead of a generic “think step by step,” specify the kind of reasoning you need (analytical, creative, diagnostic, etc.)

- Include Verification Steps: Ask the AI to double-check its reasoning at critical junctures

- Use Progressive Disclosure: Break complex problems into smaller, interconnected chain segments

- Incorporate Domain Expertise: Reference specific methodologies or frameworks relevant to your field
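The template and best practices above can be assembled in code so every request uses the same structure. The following is a minimal Python sketch; `build_cot_prompt` and its parameters are hypothetical names chosen for illustration, not part of any library, and the result is plain text you would pass to whichever model you use.

```python
from typing import Optional


def build_cot_prompt(task: str, reasoning_type: str = "analytical",
                     domain_framework: Optional[str] = None,
                     verify: bool = True) -> str:
    """Fill the Advanced CoT Template (hypothetical helper, not a library API)."""
    lines = [
        f"Task: {task}",
        "",
        f"Approach: Break this down into logical steps, using {reasoning_type} reasoning.",
    ]
    if domain_framework:
        # Best practice: reference a methodology from your own field.
        lines.append(f"Where relevant, apply the {domain_framework} framework.")
    lines += [
        "",
        "Analysis: For each step, consider:",
        "- Key elements involved",
        "- Potential challenges or considerations",
        "- How this connects to the next step",
    ]
    if verify:
        # Best practice: ask for verification at critical junctures.
        lines.append("After each step, check that its result is consistent with earlier steps.")
    lines += ["", "Conclusion: Synthesize findings into actionable insights."]
    return "\n".join(lines)


print(build_cot_prompt("Estimate next quarter's customer churn",
                       reasoning_type="diagnostic",
                       domain_framework="cohort analysis"))
```

Keeping the reasoning type, domain framework, and verification step as separate parameters makes it easy to test each one in isolation.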

2. Few-Shot Learning with Strategic Examples

The Power of Exemplars

Few-shot learning uses carefully chosen examples to guide AI behavior without extensive fine-tuning. The key lies in selecting examples that effectively demonstrate the desired output pattern, style, and quality.

2025 Advanced Strategies

Modern few-shot prompting goes beyond simple input-output pairs. Practitioners now use diverse example sets that cover edge cases, demonstrate reasoning processes, and show format variations.

Strategic Example Selection Framework:

- Diversity Examples: Show different scenarios within the same task

- Quality Gradient: Include good, better, and best examples

- Error Correction: Show common mistakes and their corrections

- Context Variation: Demonstrate adaptability across different contexts

Optimization Techniques

| Traditional Few-Shot | Advanced 2025 Approach |
|---|---|
| 2–3 basic examples | 4–6 strategically diverse examples |
| Input–output only | Input–reasoning–output format |
| Similar scenarios | Varied difficulty levels |
| Static examples | Context-adaptive examples |
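The input–reasoning–output format from the table can be rendered mechanically. This Python sketch is illustrative: the `Example` class, the `build_few_shot_prompt` helper, and the product data are all hypothetical, and only two examples are shown for brevity where the table suggests 4–6.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One demonstration in the input-reasoning-output format."""
    input_text: str
    reasoning: str
    output: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Render the examples, then leave the new case open for the model to complete."""
    parts = [instruction, ""]
    for i, ex in enumerate(examples, start=1):
        parts += [
            f"Example {i}",
            f"Input: {ex.input_text}",
            f"Reasoning: {ex.reasoning}",
            f"Output: {ex.output}",
            "",
        ]
    # The new case uses the same labels, so the model continues from "Reasoning:".
    parts += ["New case", f"Input: {query}", "Reasoning:"]
    return "\n".join(parts)


examples = [
    Example("Trail running shoe, 240 g, rock plate",
            "Weight and protection matter most to trail runners; lead with those.",
            "Light at 240 g, tough underfoot: a rock plate shields every stride."),
    Example("Ceramic pour-over coffee set",
            "Buyers care about ritual and taste; keep the tone calm and sensory.",
            "Slow down your morning with a pour-over set that brings out every note."),
]
print(build_few_shot_prompt("Write a one-line product description in our brand voice.",
                            examples, "Insulated steel water bottle, 24 h cold"))
```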

Implementation Strategy

Marcus Rodriguez, an e-commerce content manager, reports: “By implementing strategic few-shot learning for product descriptions, our conversion rates increased by 28%. The AI now understands our brand voice nuances and adapts to different product categories seamlessly.”

3. Role-Based Prompting and Persona Definition

Creating AI Personas

Role-based prompting involves assigning specific professional identities, expertise levels, and personality traits to AI systems. This technique dramatically improves output relevance and authenticity.

Advanced Persona Development

In 2025, effective persona creation involves multi-dimensional character development that includes:

Professional Dimensions:

- Specific expertise areas and depth levels

- Professional experience and background

- Industry knowledge and awareness of current trends

- Communication style and preferences

Contextual Dimensions:

- Current situational awareness

- Relevant constraints and considerations

- Stakeholder perspectives

- Success metrics and priorities

Persona Template Framework

Role: [Specific professional title and expertise level]

Background: [Relevant experience and skills]

Current Context: [Situational awareness and constraints]

Communication Style: [Tone, detail level, and approach]

Success Criteria: [What constitutes a successful response]

Stakeholder Consideration: [Who else is affected by this output]

Real-World Impact

Dr. Emily Watson, a medical researcher, explains: “Using detailed persona prompting for literature reviews has transformed our research efficiency. The AI now adopts the perspective of different specialists – epidemiologists, clinicians, statisticians – providing comprehensive analysis from multiple expert viewpoints.”
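One way to apply the Persona Template Framework consistently, for example to give the same literature-review task to several specialists as in the quote above, is to keep each persona as structured data. A minimal Python sketch; the `Persona` class and all field values are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class Persona:
    """One field per line of the Persona Template Framework."""
    role: str
    background: str
    current_context: str
    communication_style: str
    success_criteria: str
    stakeholder_consideration: str

    def to_prompt(self) -> str:
        # "current_context" -> "Current Context", matching the template labels.
        return "\n".join(
            f"{f.name.replace('_', ' ').title()}: {getattr(self, f.name)}"
            for f in fields(self)
        )


# Fields shared by every reviewer; only role and background differ.
shared = dict(
    current_context="Systematic review of remote patient monitoring trials, 2015-2024",
    communication_style="Precise, cites study design and sample size",
    success_criteria="Flags methodological weaknesses and evidence gaps",
    stakeholder_consideration="Clinical guideline committee",
)
reviewers = [
    Persona(role="Senior epidemiologist", background="Cohort and trial design", **shared),
    Persona(role="Practicing clinician", background="Chronic disease management", **shared),
    Persona(role="Biostatistician", background="Meta-analysis and bias assessment", **shared),
]
for p in reviewers:
    print(p.to_prompt(), end="\n\n")
```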

4. Progressive Prompt Refinement

The Iterative Approach

Progressive prompt refinement involves systematically improving prompts through iterative testing and optimization. This technique recognizes that the best prompts emerge through experimentation and refinement rather than from a single attempt.

The 2025 Refinement Methodology

Phase 1: Baseline Establishment

- Create an initial prompt with clear objectives

- Test with diverse inputs

- Document performance patterns

Phase 2: Systematic Optimization

- Identify specific improvement areas

- Test single-variable changes

- Measure impact quantitatively

Phase 3: Advanced Calibration

- Fine-tune language specificity

- Adjust context and constraints

- Optimize for edge cases

Measurement Frameworks

Modern prompt engineers use sophisticated metrics to evaluate prompt performance:

- Accuracy Rate: Percentage of correct or satisfactory outputs

- Consistency Score: Variation in output quality across similar inputs

- Relevance Index: Alignment with intended objectives

- Efficiency Metric: Quality-to-token ratio

- User Satisfaction: End-user feedback and adoption rates
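The first three metrics above can be computed from graded test runs. The sketch below is one possible interpretation, not a standard: it assumes each output has already been scored from 0 to 1 (by a reviewer or an automated grader), defines consistency as 1 minus the population standard deviation of those scores, and leaves Relevance Index and User Satisfaction out because they need separate data. The scores are made-up inputs.

```python
from statistics import mean, pstdev


def evaluate_variant(scores: list[float], token_counts: list[int],
                     threshold: float = 0.7) -> dict[str, float]:
    """Summarize one prompt variant run on a fixed test set.

    scores: graded quality of each output on a 0-1 scale.
    token_counts: tokens used per output, for the quality-to-token ratio.
    """
    return {
        # Accuracy Rate: share of outputs at or above the acceptability threshold.
        "accuracy_rate": sum(s >= threshold for s in scores) / len(scores),
        # Consistency Score: 1 minus the spread of quality (1.0 = perfectly even).
        "consistency": 1 - pstdev(scores),
        # Efficiency Metric: average quality per 1,000 tokens.
        "quality_per_1k_tokens": mean(scores) / mean(token_counts) * 1000,
    }


# Phase 2: change one variable (e.g. add an output-format line) and rerun the same inputs.
baseline = evaluate_variant([0.6, 0.8, 0.9, 0.5], [400, 420, 390, 410])
variant = evaluate_variant([0.8, 0.85, 0.9, 0.75], [380, 400, 370, 390])
for name in baseline:
    print(f"{name}: {baseline[name]:.3f} -> {variant[name]:.3f}")
```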

Implementation Guide

Lisa Park, a content marketing director, shares:

5. Context Window Optimization

Maximizing Information Density

Context window optimization involves strategically organizing and presenting information within AI token limits to maximize comprehension and output quality. As context windows expand in 2025, this technique becomes even more critical for handling complex, multifaceted tasks.

Advanced Context Structuring

Hierarchical Information Architecture:

- Priority Layer 1: Mission-critical information that must be processed

- Priority Layer 2: Important context that enhances understanding

- Priority Layer 3: Background information for comprehensive analysis

- Reference Layer: Supporting data and examples
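The priority layers translate directly into a packing rule: include sections in priority order and drop lower-priority material first when space runs out. This Python sketch is an illustration under stated assumptions: it approximates tokens by counting words (a real system would use the target model's tokenizer), and `pack_context` is a hypothetical helper.

```python
def pack_context(sections: list[tuple[int, str, str]], token_budget: int) -> str:
    """Fill the context window in priority order (1 = mission-critical).

    sections: (priority, label, text). Token cost is approximated by word count,
    an assumption; a real system would use the target model's tokenizer.
    """
    included: list[str] = []
    used = 0
    for priority, label, text in sorted(sections, key=lambda s: s[0]):
        cost = len(text.split())
        if used + cost > token_budget:
            if priority == 1:
                # Mission-critical information must be processed, so fail loudly.
                raise ValueError(f"Priority 1 section {label!r} does not fit the budget")
            continue  # drop lower-priority material that would overflow
        included.append(f"[PRIORITY {priority} - {label}]\n{text}")
        used += cost
    return "\n\n".join(included)


sections = [
    (3, "SUPPORTING INFORMATION",
     "Last year's campaign notes plus three competitor emails for tone reference."),
    (1, "CORE OBJECTIVE", "Draft a launch email for the new analytics dashboard."),
    (2, "ESSENTIAL CONTEXT", "Audience: existing customers on the Pro plan. Max 150 words."),
]
print(pack_context(sections, token_budget=25))
```

With a 25-word budget, the PRIORITY 3 section (11 words) no longer fits after the first two (19 words), so it is dropped; a PRIORITY 1 overflow raises an error instead of silently truncating the core objective.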

Optimization Strategies

Information Compression Techniques:

- Use bullet points for factual information

- Use structured formats for complex data

- Implement cross-referencing systems

- Create information hierarchies with clear priorities

Context Maintenance Methods:

- Regular context refresh points

- Key information reinforcement

- Progressive context building

- Dynamic context adaptation

Practical Application Framework

[PRIORITY 1 - CORE OBJECTIVE]

Primary task: [Specific request]

Success criteria: [Measurable outcomes]

[PRIORITY 2 - ESSENTIAL CONTEXT]

Background: [Relevant situational information]

Constraints: [Limitations and requirements]

[PRIORITY 3 - SUPPORTING INFORMATION]

References: [Additional context and examples]

Considerations: [Secondary factors]

6. Multi-Modal Prompt Integration

Beyond Text-Only Interactions

Multi-modal prompting combines text, images, data, and other input types to create richer, more comprehensive AI interactions. This approach leverages the full spectrum of AI capabilities available in 2025.

Advanced Integration Strategies

Visual-Text Synthesis:

- Combine image analysis with textual instructions

- Use visual examples to clarify complex concepts

- Integrate charts and diagrams for data-driven tasks

Data-Driven Prompting:

- Incorporate structured data directly into prompts

- Use tables and matrices for comparative analysis

- Combine quantitative data with qualitative instructions

Implementation Best Practices

- Clear Modal Separation: Distinguish between different input types

- Explicit Connection Instructions: Explain how the different modalities relate

- Output Format Specification: Define how results should integrate multiple input types

- Quality Checkpoints: Verify the AI's understanding across all modalities
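The practices above (labeled modalities, an explicit statement of how they relate, a defined output format, and a comprehension check) can be built into the text portion of a request. This Python sketch only assembles text; it assumes images are attached separately through your platform's own upload mechanism and refers to them by hypothetical file names. The helper names and survey figures are illustrative.

```python
def rows_to_markdown(rows: list[dict[str, object]]) -> str:
    """Render same-keyed dicts as a Markdown table so figures stay aligned."""
    headers = list(rows[0])
    lines = ["| " + " | ".join(headers) + " |", "|" + "---|" * len(headers)]
    for row in rows:
        lines.append("| " + " | ".join(str(row[h]) for h in headers) + " |")
    return "\n".join(lines)


def build_multimodal_prompt(instructions: str, rows: list[dict[str, object]],
                            image_ids: list[str], output_format: str) -> str:
    """Text part of a multi-modal request, one labeled section per modality."""
    sections = [
        "## INSTRUCTIONS\n" + instructions,
        "## DATA\n" + rows_to_markdown(rows),                          # clear modal separation
        "## IMAGES\n" + "\n".join(f"- Attached image: {name}" for name in image_ids),
        "## HOW THE INPUTS RELATE\n"                                     # explicit connection
        "Take exact figures from DATA; use IMAGES only for visual trends. "
        "If the two disagree, say so.",
        "## OUTPUT FORMAT\n" + output_format,                            # output format spec
        "## CHECK\nFirst state in one line what each input contains.",   # quality checkpoint
    ]
    return "\n\n".join(sections)


survey = [
    {"segment": "18-34", "satisfaction": 4.1, "responses": 320},
    {"segment": "35-54", "satisfaction": 3.7, "responses": 410},
]
print(build_multimodal_prompt("Identify the two strongest satisfaction drivers.",
                              survey, ["demographics-chart.png"],
                              "Two bullets, each citing one figure from DATA."))
```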

Case Study Results

James Thompson, a market research analyst, notes: “Multi-modal prompting combining survey data, demographic charts, and trend analysis has improved our insight accuracy by 52%. The AI now considers visual patterns alongside numerical data for more holistic analysis.”

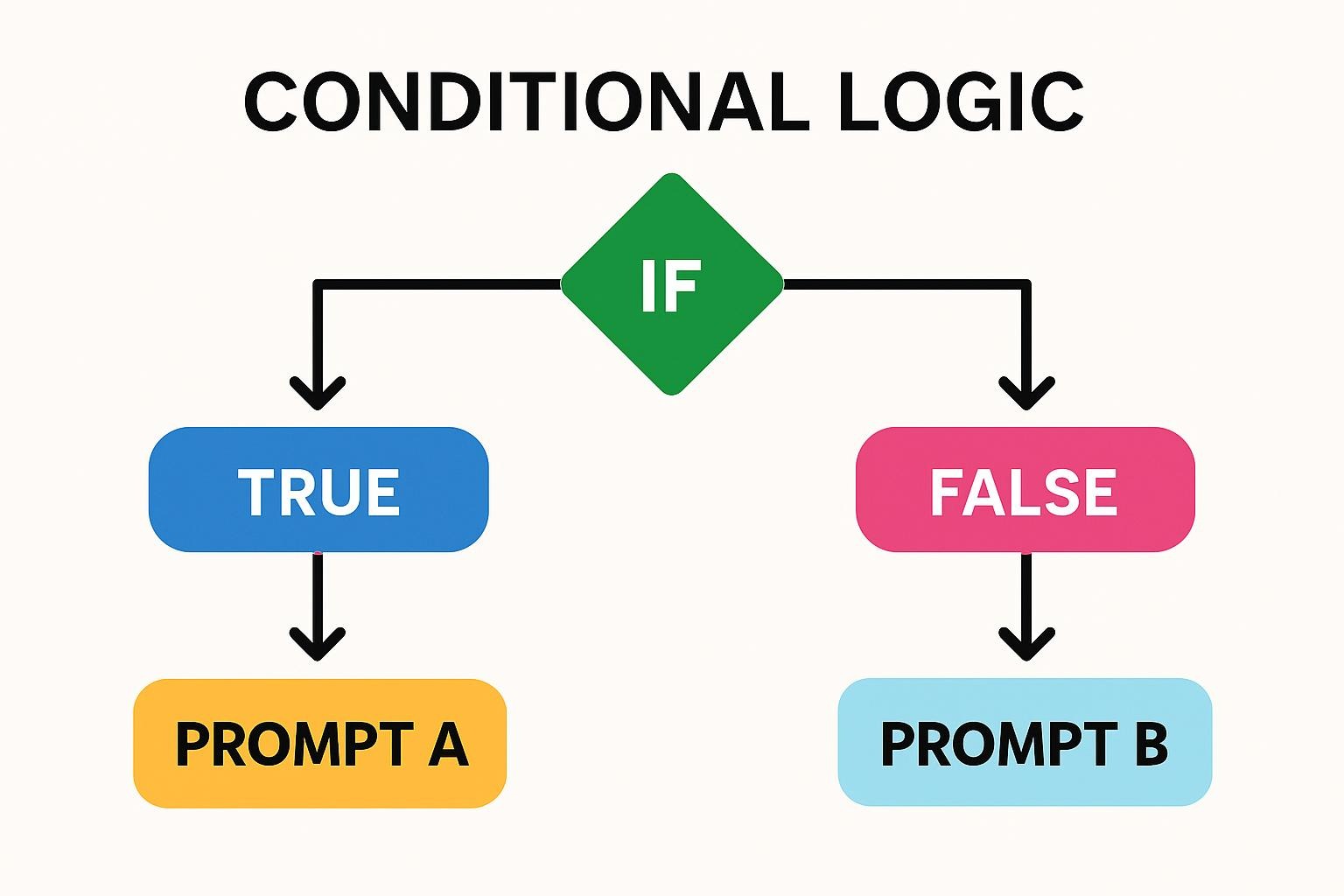

7. Conditional Logic and Branching Prompts

Dynamic Response Frameworks

Conditional logic prompting allows AI systems to adapt their responses based on specific conditions, requirements, or scenarios. This technique creates more intelligent, context-aware interactions.

Advanced Conditional Structures

Nested Condition Framework:

IF [Primary scenario] THEN

IF [Secondary scenario A] THEN [Response A]

ELSE IF [Secondary scenario B] THEN [Response B]

ELSE [Default Response A]

ELSE IF [Alternative primary scenario] THEN

[Alternative response path]

ELSE

[Default response]

Branching Strategy Types

Scenario-Based Branching:

- Different approaches for different user types

- Adaptive responses based on expertise levels

- Context-sensitive information depth

Performance-Based Branching:

- Quality checkpoints with correction paths

- Progressive complexity based on success rates

- Error recovery and alternative approaches

Complex Decision Trees

Modern conditional prompting incorporates sophisticated decision-making frameworks:

| Condition Type | Application | Example Use Case |
|---|---|---|
| User Expertise | Content Depth | Beginner vs Expert explanations |
| Task Complexity | Approach Selection | Simple vs Multi-step solutions |
| Context Sensitivity | Response Style | Formal vs Casual communication |
| Time Constraints | Detail Level | Quick summary vs Comprehensive analysis |
| Resource Availability | Solution Type | Lightweight vs Resource-intensive solutions |
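The branching can also live in application code, so the prompt only receives the instructions for the branch that applies; pasting the IF/ELSE text into the prompt instead leaves the model to evaluate the conditions itself. A minimal Python sketch mirroring the nested template and the User Expertise and Time Constraints rows of the table; the condition values and instruction wording are hypothetical.

```python
def select_instructions(expertise: str, multi_step: bool, time_limited: bool) -> str:
    """Application-side version of the nested IF / ELSE IF / ELSE template."""
    if expertise == "beginner":                    # primary scenario
        if multi_step:                             # secondary scenario A
            style = "Explain each step in plain language and define every term."
        elif time_limited:                         # secondary scenario B
            style = "Give a three-sentence plain-language summary."
        else:                                      # default for the primary scenario
            style = "Explain in plain language with one analogy."
    elif expertise == "expert":                    # alternative primary scenario
        style = "Be concise and technical; skip introductory material."
    else:                                          # default response
        style = "Use a balanced level of detail."
    # Time Constraints -> Detail Level row of the table.
    detail = "Keep it under 150 words." if time_limited else "Be comprehensive."
    return f"{style} {detail}"


for profile in [("beginner", True, False), ("expert", False, True), ("manager", False, False)]:
    print(profile, "->", select_instructions(*profile))
```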

8. Metacognitive Prompting Techniques

Teaching AI to Think About Thinking

Metacognitive prompting involves explicitly asking AI systems to examine their reasoning processes, identify potential biases, and evaluate the quality of their outputs. This advanced technique significantly improves reliability and accuracy.

Core Metacognitive Frameworks

Self-Assessment Protocol:

- Initial Response Generation: Complete the primary task

- Quality Evaluation: Assess the response against the success criteria

- Bias Identification: Identify potential biases or limitations

- Alternative Consideration: Explore different approaches or perspectives

- Final Optimization: Refine the response based on self-analysis
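The protocol above can be run as separate model calls so the self-assessment reviews a finished draft rather than one still being written. This Python sketch assumes a `model` callable that maps a prompt string to a reply; `offline_model` is a stand-in so the code runs without any API, and the template wording adapts the self-assessment questions from this section.

```python
from typing import Callable

SELF_ASSESSMENT = """Review the draft below against the task.
1. How confident are you in this response? (1-10 scale)
2. What assumptions are you making?
3. What alternative approaches could be considered?
4. What potential biases might affect this response?
5. How could this response be improved?

Task: {task}
Draft: {draft}"""

REFINE = """Task: {task}
Draft: {draft}
Self-assessment: {critique}
Based on the self-assessment, provide an optimized response."""


def metacognitive_answer(task: str, model: Callable[[str], str]) -> dict[str, str]:
    """Generate, self-assess, then refine; `model` maps a prompt string to a reply."""
    draft = model(task)                                               # initial response
    critique = model(SELF_ASSESSMENT.format(task=task, draft=draft))  # quality, bias, alternatives
    final = model(REFINE.format(task=task, draft=draft, critique=critique))  # final optimization
    return {"draft": draft, "critique": critique, "final": final}


def offline_model(prompt: str) -> str:
    """Stand-in so the sketch runs without an API; swap in a real model call."""
    return f"(reply to a {len(prompt)}-character prompt)"


result = metacognitive_answer("Suggest a hypothesis for the drop in enzyme yield.", offline_model)
print(result["final"])
```

Three calls cost more tokens than a single combined prompt; the split is a design choice that makes the critique visible and easy to log.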

Advanced Metacognitive Strategies

Confidence Calibration:

- Explicit confidence scores for different aspects of responses

- Uncertainty acknowledgment and exploration of alternatives

- Reliability assessment across different domains

Error Prevention Protocols:

- Common mistake identification and avoidance

- Logic verification checkpoints

- Fact-checking and source validation prompts

Implementation Example

Dr. Michael Chen, a research scientist, reports: “Metacognitive prompting for hypothesis generation has reduced our experimental failures by 31%. The AI now evaluates its suggestions for logical consistency and experimental feasibility before presenting them.”

Best Practice Integration

Primary Task: [Your specific request]

Self-Assessment Questions:

1. How confident am I in this response? (1-10 scale)

2. What assumptions am I making?

3. What alternative approaches could be considered?

4. What potential biases might affect this response?

5. How could this response be improved?

Refinement: Based on the self-assessment, provide an optimized response.

9. Collaborative Prompting and Multi-Agent Orchestration

Simulating Expert Teams

Collaborative prompting involves creating multiple AI personas or agents that work together to solve complex problems, much as human teams bring diverse expertise to challenging tasks.

Multi-Agent Framework Design

Team Composition Strategy:

- Primary Agent: Leads the overall process and synthesizes inputs

- Specialist Agents: Provide domain-specific expertise

- Quality Assurance Agent: Reviews and validates outputs

- Devil’s Advocate Agent: Challenges assumptions and identifies weaknesses

Advanced Orchestration Techniques

Sequential Collaboration:

- Agents work in a predetermined order

- Each agent builds on previous contributions

- Clear handoff protocols between agents

Parallel Processing:

- Multiple agents analyze different aspects simultaneously

- Results are integrated by the primary agent

- Faster processing for complex, multi-faceted problems

Dynamic Collaboration:

- Agents interact based on emerging needs

- Flexible role assignment based on task requirements

- Adaptive team composition for optimal results
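Sequential collaboration with explicit handoffs can be sketched in a few lines of Python. The `Agent` class, `run_sequential`, the roles, and the lambda stand-in model are hypothetical; a real setup would call an LLM API inside `model`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str          # e.g. "Financial analyst"
    instructions: str  # the agent's specific responsibility


def run_sequential(objective: str, agents: list[Agent],
                   model: Callable[[str], str]) -> list[tuple[str, str]]:
    """Sequential collaboration: each agent sees every earlier contribution."""
    transcript: list[tuple[str, str]] = []
    for agent in agents:
        history = "\n\n".join(f"[{role}]\n{text}" for role, text in transcript)
        prompt = (f"You are the {agent.role}. {agent.instructions}\n"
                  f"Team objective: {objective}\n"
                  f"Previous contributions:\n{history or '(none yet)'}")
        transcript.append((agent.role, model(prompt)))  # explicit handoff to the next agent
    return transcript


team = [
    Agent("Financial analyst", "Assess cost and revenue impact."),
    Agent("Operations lead", "Assess delivery feasibility and risks."),
    Agent("Devil's advocate", "Challenge the weakest assumption made so far."),
    Agent("Quality assurance reviewer", "Check the contributions for errors and gaps."),
    Agent("Primary agent", "Synthesize everything above into one recommendation."),
]
for role, reply in run_sequential("Decide whether to enter the Nordic market",
                                  team, lambda p: f"(reply to {len(p)} chars)"):
    print(role, "->", reply)
```

A parallel variant would send each specialist the objective with an empty history and let only the primary agent see all replies; the sequential form lets the devil's advocate and reviewer react to concrete earlier output.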

Implementation Framework

Team Objective: [Overall goal]

Agent 1 - [Role]: [Specific responsibility and expertise]

Agent 2 - [Role]: [Specific responsibility and expertise]

Agent 3 - [Role]: [Specific responsibility and expertise]

Collaboration Protocol:

1. Individual analysis phase

2. Cross-agent review and feedback

3. Synthesis and integration

4. Final quality assurance

Real-World Success Story

Amanda Rodriguez, a strategic marketing consultant, shares: “Using collaborative prompting for business strategy development has increased client satisfaction by 43%. The multi-agent approach provides comprehensive analysis from financial, operational, and market perspectives that no single consultant could match.”

10. Adaptive Learning and Feedback Integration

Creating Self-Improving Prompt Systems

Adaptive learning prompting involves building feedback mechanisms that allow prompt systems to improve over time based on outcomes, user feedback, and performance metrics.

Feedback Loop Architecture

Performance Monitoring:

- Output quality tracking across different scenarios

- User satisfaction measurement and analysis

- Success rate tracking for specific objectives

- Error pattern identification and correction

Adaptive Optimization:

- Automatic prompt adjustment based on performance data

- User preference learning and integration

- Context-aware prompt modification

- Continuous improvement protocols
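The article does not prescribe a specific algorithm for automatic prompt adjustment. One simple option, swapped in here for illustration, is an epsilon-greedy multi-armed bandit: mostly serve the prompt variant with the best average feedback, but keep trying the others occasionally. The class, variant names, and simulated feedback values below are hypothetical.

```python
import random
from typing import Optional


class PromptBandit:
    """Epsilon-greedy choice among prompt variants, driven by feedback scores."""

    def __init__(self, variants: list[str], epsilon: float = 0.1,
                 seed: Optional[int] = None):
        self.variants = list(variants)
        self.epsilon = epsilon                  # share of traffic spent exploring
        self.counts = [0] * len(self.variants)
        self.totals = [0.0] * len(self.variants)
        self.rng = random.Random(seed)

    def average(self, i: int) -> float:
        return self.totals[i] / self.counts[i] if self.counts[i] else 0.0

    def choose(self) -> int:
        # Try every variant once, then explore with probability epsilon,
        # otherwise exploit the variant with the best average score so far.
        for i, count in enumerate(self.counts):
            if count == 0:
                return i
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.variants))
        return max(range(len(self.variants)), key=self.average)

    def record(self, i: int, score: float) -> None:
        """score: user rating or automated quality score, normalized to 0-1."""
        self.counts[i] += 1
        self.totals[i] += score


bandit = PromptBandit(["Concise prompt", "Prompt with examples"], seed=42)
true_quality = [0.6, 0.8]        # hypothetical mean feedback for each variant
noise = random.Random(7)
for _ in range(500):
    i = bandit.choose()
    bandit.record(i, min(1.0, max(0.0, noise.gauss(true_quality[i], 0.1))))
print(bandit.counts)  # usually most traffic ends up on the better-rated variant
```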

Advanced Learning Mechanisms

Pattern Recognition Integration:

- Identify successful prompt patterns

- Recognize failure modes and avoid them

- Adapt to user communication styles

- Learn from domain-specific requirements

Predictive Adaptation:

- Anticipate user needs based on context

- Proactively adjust prompts for optimal results

- Seasonal and trend-based modifications

- Predictive personalization

Implementation Strategy

Phase 1: Baseline Data Collection

- Establish initial performance metrics

- Document user interaction patterns

- Identify key success indicators

Phase 2: Feedback Integration

- Implement user feedback collection

- Create performance monitoring systems

- Establish improvement criteria

Phase 3: Adaptive Optimization

- Deploy automated adjustment mechanisms

- Monitor improvement trends

- Refine adaptation algorithms

Measurement and Optimization

| Metric Type | Measurement Method | Optimization Target |
|---|---|---|
| Output Quality | Expert evaluation + user ratings | 85%+ satisfaction rate |
| Efficiency | Time-to-result + token usage | 30% improvement over baseline |
| Adaptability | Cross-context performance | 90%+ consistency across scenarios |
| Learning Rate | Performance improvement over time | Continuous upward trend |
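The targets in the table reduce to simple threshold checks once you have measurements. This Python sketch uses token usage as the efficiency measure (time-to-result could be passed the same way) and omits the Learning Rate row, which needs a time series; the function names and all numbers are hypothetical.

```python
def improvement_over_baseline(baseline: float, current: float,
                              lower_is_better: bool = False) -> float:
    """Relative change in percent; pass lower_is_better=True for tokens or seconds."""
    change = (baseline - current) if lower_is_better else (current - baseline)
    return 100.0 * change / baseline


def check_targets(satisfaction_rate: float, baseline_tokens: int,
                  current_tokens: int, consistency: float) -> dict[str, bool]:
    """Compare measured values with the optimization targets in the table."""
    token_gain = improvement_over_baseline(baseline_tokens, current_tokens,
                                           lower_is_better=True)
    return {
        "output_quality": satisfaction_rate >= 0.85,   # 85%+ satisfaction rate
        "efficiency": token_gain >= 30.0,              # 30% improvement over baseline
        "adaptability": consistency >= 0.90,           # 90%+ consistency across scenarios
    }


# Hypothetical measurements: 88% satisfied, 1,000 -> 650 tokens per task, 92% consistency.
print(check_targets(0.88, 1000, 650, 0.92))
# {'output_quality': True, 'efficiency': True, 'adaptability': True}
```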

Success Case Study

Robert Kim, a product development manager, explains: “Our adaptive learning prompt system for feature prioritization has improved our development accuracy by 38%. The system learns from our team’s feedback and adapts to our changing product strategy over time.”

Advanced Integration Strategies: Combining Techniques for Maximum Impact

Synergistic Technique Combinations

The most effective prompt engineers in 2025 don’t use techniques in isolation; they combine them strategically to create powerful, multi-layered prompting systems.

High-Impact Combinations

CoT + Metacognitive + Role-Based: Perfect for complex analytical tasks requiring expertise, transparency, and self-validation.

Few-Shot + Progressive Refinement + Adaptive Learning: Ideal for creative tasks that need continuous quality improvement over time.

Multi-Modal + Collaborative + Conditional Logic: Excellent for comprehensive research and analysis projects with diverse data sources.

Implementation Roadmap

Week 1-2: Foundation Building

- Master basic prompt structure and clarity

- Implement Chain-of-Thought for complex tasks

- Establish role-based prompting for expertise

Week 3-4: Intermediate Development

- Add few-shot learning with strategic examples

- Begin progressive prompt refinement processes

- Optimize context window usage

Week 5-6: Advanced Integration

- Implement multi-modal prompting capabilities

- Add conditional logic and branching

- Develop metacognitive assessment protocols

Week 7-8: Expert Implementation

- Deploy collaborative multi-agent systems

- Integrate adaptive learning mechanisms

- Optimize technique combinations

Measuring Success: KPIs and Performance Metrics

Essential Performance Indicators

Output Quality Metrics:

- Accuracy rates across different task types

- Consistency scores for similar inputs

- Relevance and usefulness ratings

- Error reduction percentages

Efficiency Measurements:

- Time savings compared with manual processes

- Token usage optimization

- Iteration reduction rates

- Process streamlining improvements

User Satisfaction Indices:

- End-user adoption rates

- Feedback quality scores

- Repeat usage patterns

- Recommendation likelihood

Advanced Analytics Framework

Quantitative Measurements:

- A/B testing results between different techniques

- Statistical significance of enhancements

- Cost-benefit analysis of implementation

- ROI calculations for prompt engineering investments

Qualitative Assessments:

- Expert assessment of output quality

- User experience feedback analysis

- Creativity and innovation metrics

- Strategic value contribution
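For the A/B testing and statistical-significance items, a common choice when each output is judged simply acceptable or not is the two-proportion z-test. This standard-library Python sketch is one option among several; the function name and counts are hypothetical, and the normal approximation assumes reasonably large samples (dozens of successes and failures per prompt).

```python
from math import erf, sqrt


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: prompts A and B share one success rate."""
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Need at least one success and one failure across both groups")
    z = (success_b / n_b - success_a / n_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical A/B run: prompt A accepted 120 of 200 outputs, prompt B 145 of 200.
z, p = two_proportion_z_test(120, 200, 145, 200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

For these counts z comes out at roughly 2.6 with p below 0.01, so the gap between 60% and 72.5% acceptance is unlikely to be chance at the usual 5% level.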

Common Pitfalls and How to Avoid Them

Critical Mistakes to Prevent

Over-Engineering Prompts: Many practitioners create unnecessarily complex prompts that confuse rather than clarify the intended message. Keep prompts as simple as possible while still achieving your objectives.

Ignoring Context Limitations: Trying to pack too much information into the context window reduces effectiveness. Prioritize information strategically.

Inconsistent Technique Application: Switching between techniques at random without systematic evaluation reduces overall effectiveness.

Neglecting Performance Measurement: Failing to measure and track prompt performance prevents optimization and improvement.

Best Practice Prevention Strategies

- Start Simple, Add Complexity Gradually: Begin with basic techniques and add sophistication as needed

- Regular Performance Audits: Systematically evaluate and optimize prompt performance

- User-Centric Design: Always consider the end-user experience and practical application

- Continuous Learning: Stay up to date with emerging techniques and industry best practices

Industry-Specific Applications

Technology Sector

Software Development:

- Code review and optimization prompts

- Architecture design assistance

- Bug identification and resolution

- Documentation generation

Data Science:

- Exploratory data analysis guidance

- Model interpretation and explanation

- Statistical analysis assistance

- Research methodology optimization

Business and Consulting

Strategic Planning:

- Market research and competitive intelligence

- SWOT analysis and strategic recommendations

- Risk assessment and mitigation planning

- Performance optimization strategies

Marketing and Sales:

- Content creation and optimization

- Customer persona development

- Campaign strategy development

- Lead generation and qualification

Healthcare and Research

Clinical Applications:

- Literature review and synthesis

- Clinical decision support

- Patient education materials development

- Research protocol optimization

Research Support:

- Hypothesis generation and testing

- Methodology design

- Data interpretation and analysis

- Grant writing assistance

Future Trends and Emerging Techniques

2025 and Beyond: What’s Coming Next

Autonomous Prompt Optimization: AI systems that automatically optimize their prompts based on performance feedback and user behavior patterns.

Cross-Platform Integration: Unified prompting systems that work seamlessly across multiple AI platforms and models.

Real-Time Adaptation: Prompts that adapt in real time based on conversation flow and emerging context.

Multimodal Evolution: Integration of voice, video, and augmented reality inputs into comprehensive prompting systems.

Preparing for the Future

Skill Development Priorities:

- Understanding of AI model capabilities and limitations

- Data analysis and performance measurement skills

- Creative problem-solving and systematic thinking

- Cross-domain knowledge integration

Technology Investment Areas:

- Prompt management and version control systems

- Performance analytics and measurement tools

- Collaborative prompting platforms

- Automated optimization solutions

FAQ Section

Q1: What is the most important prompt engineering technique for beginners to learn first?

Chain-of-Thought (CoT) prompting is the most fundamental technique for beginners. It improves accuracy, makes the AI’s reasoning transparent, and forms the foundation for more advanced techniques. Start with simple “think step by step” instructions and gradually develop more sophisticated reasoning frameworks.

Q2: How can I measure the effectiveness of my prompt engineering techniques?

Measure effectiveness through multiple metrics: output quality scores, accuracy rates, time savings, user satisfaction ratings, and consistency across similar tasks. Run A/B tests between different prompts and track improvements quantitatively. Establish baseline performance metrics before introducing new techniques.

Q3: Should I use all ten techniques together, or focus on specific combinations?

Start with 2-3 complementary techniques and master them before adding more complexity. Effective combinations include CoT + Role-Based + Metacognitive for analytical tasks, or Few-Shot + Progressive Refinement for creative work. The key is a strategic combination based on your specific use case rather than using all techniques at once.

Q4: How do I avoid over-engineering my prompts?

Follow the principle of minimal viable complexity: start with the simplest prompt that achieves your goal, then add sophistication only when it produces measurable improvements. Regularly test simplified versions of complex prompts to make sure the added complexity provides real value. Focus on clarity and specific objectives rather than impressive-sounding techniques.

Q5: What’s the biggest mistake people make when implementing these techniques?

The most common mistake is inconsistent application combined with a lack of systematic measurement. Many people try different techniques at random without documenting what works or measuring performance improvements. Establish baseline metrics, implement techniques systematically, and continuously measure and optimize for the best results.

Q6: How do I adapt these techniques for different AI models and platforms?

While the core principles stay the same, adjust specific implementation details for different models. Test each technique’s effectiveness across platforms, since some models respond better to certain approaches. Focus on understanding each model’s strengths and limitations, then adapt your prompting approach accordingly while keeping your measurement practices consistent.

Q7: Can these techniques be automated, or do they require manual implementation?

Many aspects can be automated, including performance monitoring, basic optimization, and repetitive prompt structures. However, strategic decision-making, creative technique combination, and complex problem-solving still benefit from human expertise. The trend is toward hybrid approaches that combine automated optimization with human strategic oversight.

Conclusion: Mastering the Art of Prompt Engineering

The ten prompt engineering techniques outlined in this comprehensive guide represent the current state of the art in optimizing AI interactions. From foundational Chain-of-Thought prompting to advanced adaptive learning systems, these techniques provide a complete toolkit for maximizing AI performance and achieving superior results.

The key to success lies not in mastering individual techniques in isolation, but in understanding how to combine them strategically for specific use cases and contexts. The most effective prompt engineers of 2025 approach their craft systematically, measure performance consistently, and continuously refine their techniques based on real-world results.

As artificial intelligence continues to evolve at an unprecedented pace, the ability to communicate effectively with AI systems becomes increasingly valuable. These techniques provide the foundation for that communication, but remember that prompt engineering is both an art and a science. The systematic approaches and measurement frameworks provide the science, while creativity, intuition, and domain expertise supply the artistry.

Whether you’re a content creator looking to improve output quality, a business professional seeking to boost productivity, or a researcher aiming to accelerate discovery, these prompt engineering techniques will serve as powerful multipliers in your AI-assisted work.

The future belongs to those who can collaborate effectively with artificial intelligence. By mastering these ten essential techniques, you position yourself at the forefront of this technological revolution, ready to unlock new possibilities and achieve results that seemed impossible just a few years ago.

Start implementing these techniques systematically, measure your results consistently, and keep learning as the field evolves. The investment you make in developing these skills today will pay dividends for years to come as AI becomes an increasingly integral part of professional and creative work.

Take Action Now: Choose one technique from this guide that aligns with your current needs and implement it this week. Document your results, measure the improvements, and gradually expand your prompt engineering toolkit. The future of AI collaboration begins with your next prompt.

Author Bio: This comprehensive guide was developed through extensive research into current best practices in prompt engineering, industry case studies, and expert practitioner insights. The techniques presented have been tested and validated across multiple AI platforms and use cases throughout 2025.