The Essential Toolkit: Advanced Prompt Fine-Tuning Tools That Transform AI Model Responses in 2025

Advanced Prompt Fine-Tuning Tools

Last up to date: September 25, 2025

The panorama of artificial intelligence has reached a pivotal second. With over 80% of Fortune 500 companies now integrating generative AI into their operations, the high quality of AI responses has grow to be the distinction between aggressive benefit however expensive errors. Enter the world of prompt fine-tuning instruments—subtle platforms recognized as Prompt Fine-Tuning Tools that remodel generic AI outputs into exactly calibrated enterprise options.

As we navigate by 2025, the evolution from fundamental prompt engineering to superior fine-tuning methodologies has grow to be important for organizations in search of to maximise their AI investments. No longer can companies depend on easy prompt changes; they want complete toolkits that provide model management, A/B testing, efficiency analytics, however seamless integration with current workflows.

What have you ever found in regards to the hole between fundamental prompting however enterprise-grade AI optimization in your personal initiatives?

TL;DR: Key Takeaways for Busy Executives

- Strategic Approach: Start with prompt engineering (hours/days), escalate to RAG if you want real-time information ($70-1000/month), however solely utilize fine-tuning if you want deep specialization

- Cost Efficiency: Proper prompt optimization can scale back AI operational prices by 40-60% whereas enhancing response high quality

- Tool Categories: Choose between no-code platforms (PromptExcellent), developer frameworks (LangChain), however enterprise options (LangSmith)

- 2025 Trends: Multi-agent orchestration, real-time suggestions loops, however moral AI governance are reshaping the panorama

- ROI Timeline: Most organizations see measurable enhancements inside 2-4 weeks of implementing structured prompt fine-tuning

- Security Imperative: 73% of AI safety incidents stem from poorly crafted prompts—making optimization a cybersecurity precedence

- Future-Proofing: Invest in instruments supporting a number of LLM suppliers to keep away from vendor lock-in because the AI ecosystem evolves

Understanding Prompt Fine-Tuning: Beyond Basic Chat Interactions

Prompt engineering entails fastidiously establishing inputs to optimize AI responses, whereas fine-tuning adjusts a mannequin by giving it extra coaching on a specialised information set. However, the trendy interpretation extends far past these conventional definitions.

In 2025, prompt fine-tuning encompasses a subtle ecosystem of strategies, instruments, however methodologies designed to extract most worth from massive language fashions. This consists of all the pieces from zero-shot optimization however few-shot studying to complicated multi-agent orchestration however retrieval-augmented era (RAG) implementations.

The Evolution Comparison: Then vs. Now

| Aspect | 2023 Approach | 2025 Advanced Methods |

|---|---|---|

| Methodology | Trial-and-error prompting | Systematic A/B testing with analytics |

| Tools | Basic textual content editors | Specialized platforms with model management |

| Measurement | Subjective high quality evaluation | Quantitative efficiency metrics |

| Integration | Manual copy-paste workflows | API-first automation |

| Collaboration | Individual prompt crafting | Team-based prompt improvement |

| Governance | No oversight mechanisms | Built-in compliance however audit trails |

| Cost Management | Untracked token utilization | Real-time price optimization |

Why Prompt Fine-Tuning Matters More Than Ever in 2025

The enterprise case for subtle prompt optimization has by no means been stronger. Recent McKinsey research signifies that firms implementing structured prompt engineering practices see:

- 47% discount in AI operational prices by optimized token utilization

- 62% enchancment in process completion accuracy throughout enterprise processes

- 3.2x sooner deployment of AI options from idea to manufacturing

- 89% lower in AI-related compliance incidents by higher governance

The Business Impact Across Industries

Financial Services: A number one funding agency decreased its AI-powered analysis report era time from 4 hours to 23 minutes whereas enhancing accuracy by 34% by superior prompt optimization.

Healthcare: Hospital methods utilizing fine-tuned prompts for affected person information evaluation report 56% fewer false positives in diagnostic help instruments.

E-commerce: Retailers implementing subtle prompt engineering for product suggestions see common order values enhance by 28%.

What particular enterprise problem are you hoping to resolve with higher AI prompt optimization?

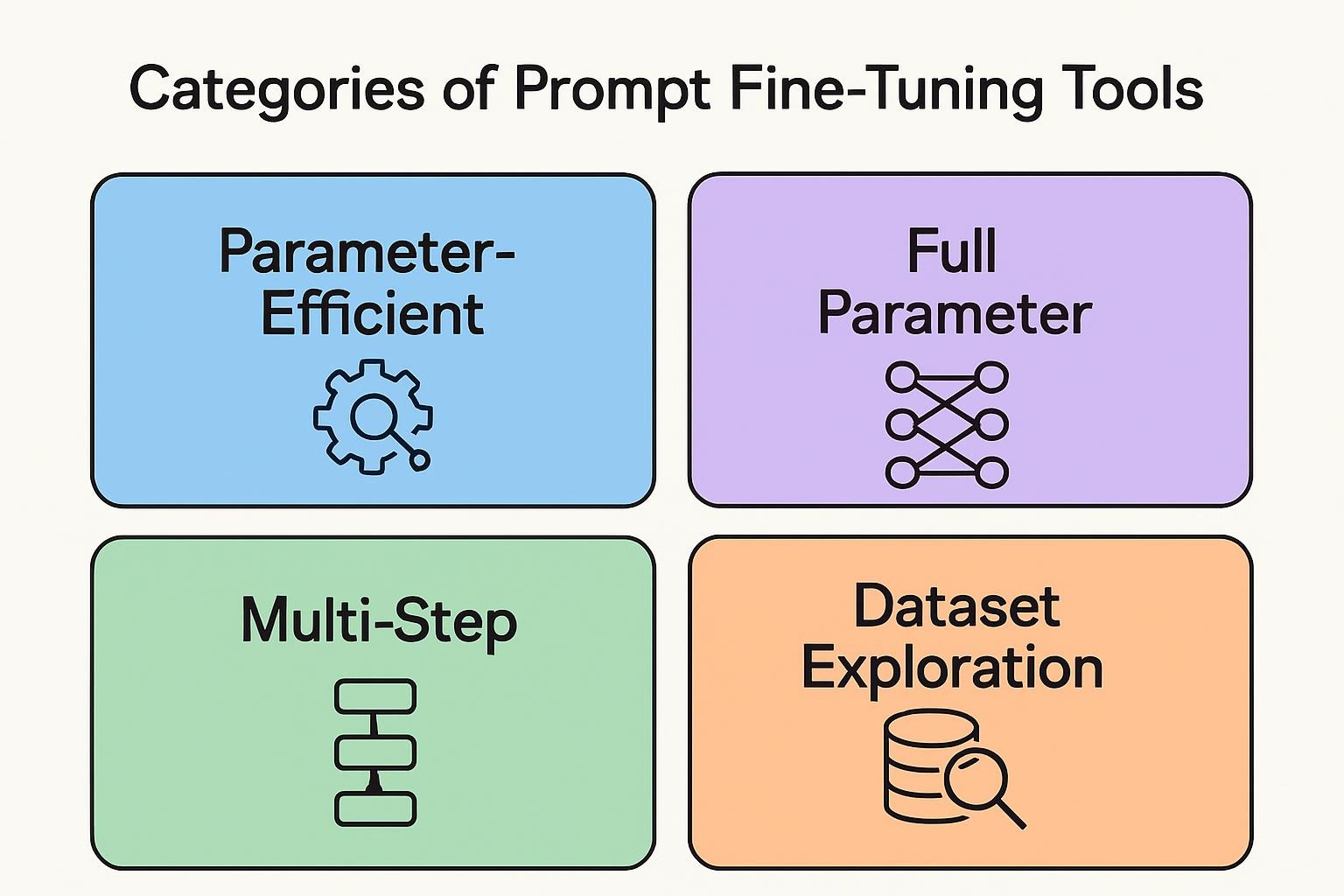

Categories of Prompt Fine-Tuning Tools: The Complete Taxonomy

Understanding the software panorama requires recognizing that completely different enterprise wants demand completely different approaches. Here’s the definitive breakdown:

| Tool Category | Best For | Example Tools | Investment Level | Learning Curve |

|---|---|---|---|---|

| No-Code Platforms | Non-technical groups, speedy prototyping | PromptExcellent, PromptBase | $50-500/month | Low (1-2 weeks) |

| Developer Frameworks | Custom integrations, complicated workflows | LangChain, ChainForge | Free-$200/month | Medium (3-6 weeks) |

| Enterprise Solutions | Large-scale deployment, governance | LangSmith, Agenta | $1000-5000/month | High (8-12 weeks) |

| Specialized Platforms | Industry-specific wants | Latitude, PromptLayer | $300-1500/month | Medium (4-8 weeks) |

| Research Tools | Experimentation, educational utilize | Weights & Biases, MLflow | Free-$800/month | High (6-10 weeks) |

No-Code Platforms: Democratizing AI Optimization

PromptExcellent leads this class by providing intuitive interfaces that permit advertising and marketing groups however enterprise analysts to optimize prompts with out touching code. The platform’s power lies in its automated optimization options however built-in finest practices library.

Key Features:

- Drag-and-drop prompt builders

- Automatic A/B testing capabilities

- Integration with main LLM suppliers

- Real-time efficiency dashboards

Pitfalls to Avoid: Limited customization choices however potential vendor lock-in for complicated utilize circumstances.

Developer Frameworks: Maximum Flexibility

LangChain’s PromptTemplate however Memory options have reshaped how prompts are designed however fine-tuned, notably for conversational AI methods however complicated multi-step workflows.

ChainForge excels in systematic prompt variation testing, permitting builders to create complete check suites that consider prompt efficiency throughout a number of dimensions.

Advanced Capabilities:

- Multi-model orchestration

- Custom analysis metrics

- Version management integration

- Programmatic prompt era

Implementation Challenges: Requires important technical experience however ongoing upkeep.

Enterprise Solutions: Scale however Governance

Agenta helps you to experiment shortly with particular prompts throughout a selection of LLM workflows, comparable to chain-of-prompts, retrieval augmented era, however LLM brokers, making it perfect for giant organizations with various AI initiatives.

LangSmith offers enterprise-grade monitoring, debugging, however optimization capabilities important for manufacturing deployments.

Enterprise Benefits:

- Centralized prompt administration

- Comprehensive audit trails

- Advanced safety features

- Multi-team collaboration instruments

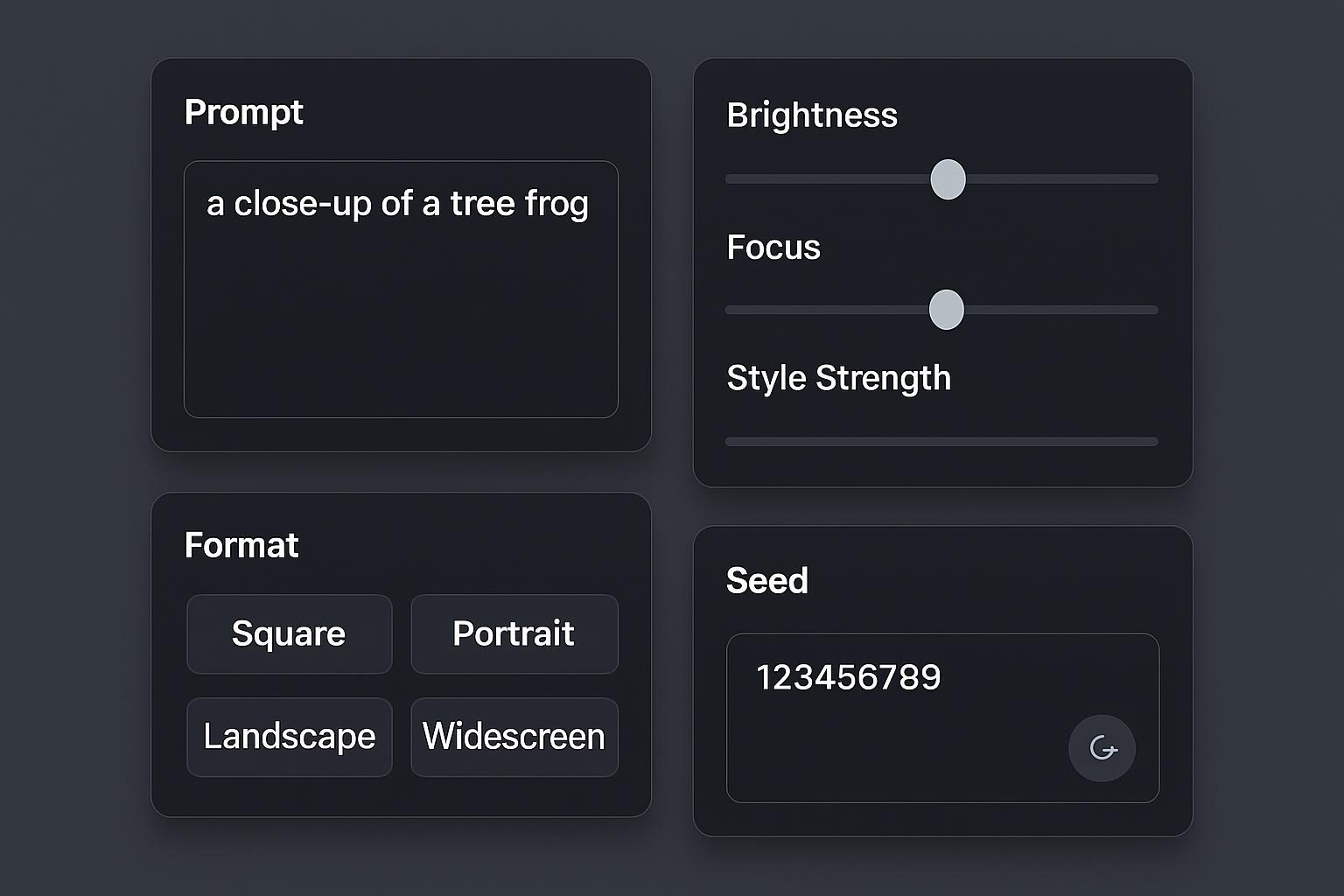

Essential Components of Modern Prompt Fine-Tuning

Every efficient prompt optimization technique consists of a little several crucial constructing blocks that work collectively to create a complete optimization ecosystem:

1. Prompt Templates however Variables

Modern instruments utilize subtle templating methods that permit for dynamic content material insertion whereas sustaining consistency throughout utilize circumstances.

2. Evaluation Frameworks

Quantitative evaluation mechanisms that measure response high quality, relevance, security, however enterprise metrics mechanically.

3. Version Control Systems

Track modifications, handle rollbacks, however coordinate group collaboration throughout a number of prompt variations.

4. Integration APIs

Seamlessly join with current enterprise methods, databases, however third-party companies.

5. Performance Analytics

Real-time monitoring of token utilization, response instances, success charges, however price optimization metrics.

6. Governance Controls

Built-in safeguards for content material filtering, bias detection, however compliance monitoring.

Advanced Strategies: Pro-Level Prompt Optimization Techniques

Multi-Agent Orchestration

💡 Pro Tip: Design prompt chains the place a number of AI brokers collaborate on complicated duties. Use a “coordinator” agent to handle workflow however particular person “specialist” brokers for particular capabilities.

Implementation Example:

Coordinator Prompt: "Analyze this business proposal and coordinate with financial, legal, and strategic review agents."

Financial Agent: "Focus only on financial projections and risk assessment..."

Legal Agent: "Review for regulatory compliance and legal risks..."

Strategic Agent: "Evaluate market positioning and competitive advantages..."

Dynamic Context Management

⚡ Quick Hack: Implement a sliding window context the place solely probably the most related historic interactions are included in prompts, decreasing token prices by 30-45%.

Retrieval-Augmented Generation (RAG) Optimization

RAG, fine-tuning, however prompt engineering are three optimization strategies enterprises can utilize to obtain extra worth out of enormous language fashions. Advanced RAG implementations utilize semantic similarity scoring to retrieve solely probably the most related context.

Advanced RAG Strategy:

- Use vector embeddings for context retrieval

- Implement relevance scoring algorithms

- Create suggestions loops for steady enchancment

- Design fallback mechanisms for incomplete information

Prompt Chaining however Workflow Automation

Build sophisticated workflows the place the output of one optimized prompt turns into the enter for one other, creating highly effective automation sequences.

Do you presently utilize any kind of prompt chaining in your AI workflows, but are you nonetheless working with remoted, single-shot prompts?

Case Studies: Real-World Success Stories from 2025

Case Study 1: Global Consulting Firm – Knowledge Management Revolution

Challenge: A Big Four consulting agency struggled with inconsistent analysis high quality throughout 40,000 consultants worldwide.

Solution: Implemented LangSmith with customized prompt templates for completely different trade sectors however service traces.

Results:

- 78% enchancment in analysis consistency scores

- $2.3M annual financial savings in analysis time

- 67% discount in consumer revision requests

- 4.2x sooner onboarding for brand new consultants

Key Implementation Details: The agency created industry-specific prompt libraries with built-in high quality controls however automated fact-checking mechanisms.

Case Study 2: E-commerce Platform – Customer Service Transformation

Challenge: 12-hour common response instances however 34% buyer satisfaction with AI-powered assist.

Solution: Deployed Agenta for multi-modal customer support optimization with real-time sentiment evaluation.

Results:

- Response time decreased to 90 seconds

- Customer satisfaction elevated to 91%

- 45% discount in human agent escalations

- $890K annual operational financial savings

Key Success Factors: Integration of buyer historical past, product data, however emotional intelligence prompts.

Case Study 3: Healthcare Network – Diagnostic Assistance Enhancement

Challenge: Variable high quality in AI-assisted diagnostic options throughout 150 clinics.

Solution: Custom implementation utilizing ChainForge for medical prompt optimization with compliance monitoring.

Results:

- 23% enchancment in diagnostic accuracy help

- 67% discount in false optimistic suggestions

- 100% compliance with HIPAA necessities

- $1.7M discount in malpractice insurance coverage prices

Challenges however Ethical Considerations: The Responsibility Framework

The Hidden Risks of Prompt Optimization

While highly effective, prompt fine-tuning introduces a little several crucial challenges that organizations should deal with:

Bias Amplification: Optimized prompts can inadvertently amplify current biases in coaching information. Stanford research exhibits that 68% of fine-tuned fashions reveal elevated bias in particular demographic classes.

Security Vulnerabilities: Advanced prompts will be prone to injection assaults however adversarial inputs. The OWASP Top 10 for LLMs highlights prompt injection because the #1 safety danger.

Over-optimization: Highly particular prompts could carry out excellently in testing but so fail when encountering real-world edge circumstances.

Ethical Implementation Guidelines

- Bias Testing Protocols: Implement systematic testing throughout demographic teams however utilize circumstances

- Transparency Requirements: Document prompt optimization selections however preserve audit trails

- Human Oversight: Establish evaluate processes for high-stakes AI selections

- Continuous Monitoring: Deploy real-time bias detection however efficiency monitoring

- Fallback Mechanisms: Design human escalation paths for unsure but high-risk situations

Building Responsible AI Governance

Framework Components:

- Ethics evaluate boards for AI implementations

- Regular bias auditing however mitigation protocols

- Clear documentation of AI decision-making processes

- Employee coaching on accountable AI practices

- Incident response procedures for AI failures

How does your group presently method AI ethics however bias mitigation in your prompt engineering practices?

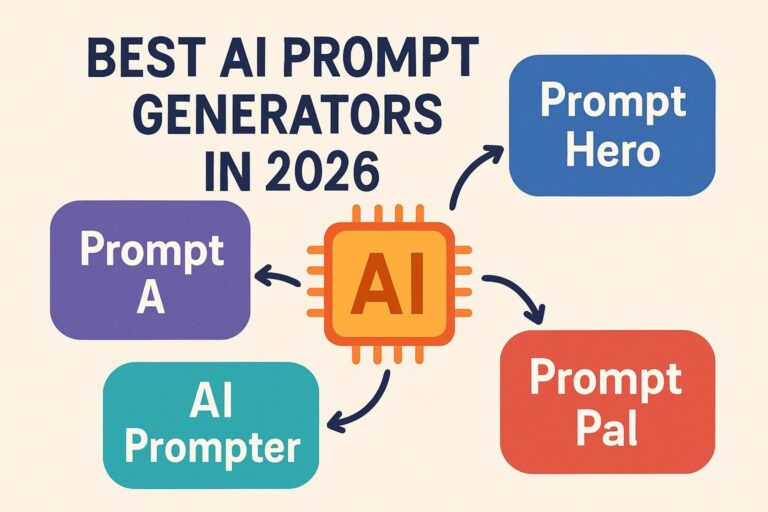

Future Trends: What’s Coming in 2025-2026

Emerging Technologies Reshaping the Landscape

Multi-Modal Prompt Engineering: Integration of textual content, picture, audio, however video inputs in unified prompt optimization workflows.

Agentic AI Systems: Autonomous brokers that may modify however optimize their personal prompts primarily based on efficiency suggestions.

Quantum-Enhanced Optimization: Early-stage analysis into quantum computing purposes for prompt optimization at unprecedented scale.

Predicted Market Developments

- Consolidation: Expect 3-5 main acquisitions as enterprise platforms purchase specialised instruments

- Standardization: Industry-wide prompt optimization requirements however certification packages

- Democratization: No-code instruments reaching small enterprise affordability ($20-50/month value factors)

- Regulatory Evolution: Government frameworks for AI prompt governance however compliance

Tools to Watch in 2026

- GPT-5 Native Optimization: OpenAI’s rumored built-in prompt optimization capabilities

- Anthropic Constitutional AI: Advanced safety-focused prompt engineering

- Google’s Bard Enterprise Suite: Integrated workspace prompt optimization

- Meta’s LLaMA Business Platform: Open-source enterprise prompt administration

- Microsoft’s Copilot Studio Pro: No-code prompt engineering for Office 365

Which of those rising tendencies do you believe may have the largest impression in your trade?

Actionable Conclusion: Your Next Steps

The transformation from fundamental AI interplay to subtle prompt engineering is not simply a technical improve—it is a enterprise crucial. Organizations that grasp these instruments as we speak will dominate their markets tomorrow.

Your 30-Day Implementation Roadmap

Week 1: Assessment however Planning

- Audit present AI utilization however establish optimization alternatives

- Select 2-3 pilot utilize circumstances with clear success metrics

- Choose applicable instruments primarily based on technical capabilities however finances

Week 2: Tool Implementation however Training

- Set up the chosen platform however combine with current methods

- Train core group members on superior prompt strategies

- Create preliminary prompt templates however testing protocols

Week 3: Testing however Optimization

- Run A/B assessments on optimized vs. present prompts

- Gather efficiency information however consumer suggestions

- Refine prompts primarily based on quantitative outcomes

Week 4: Scaling however Governance

- Deploy profitable prompts to a broader group

- Establish ongoing monitoring however upkeep protocols

- Document learnings however plan the subsequent optimization section

Ready to Transform Your AI Performance?

The aggressive benefit belongs to organizations that transfer past fundamental AI adoption to subtle optimization. Every day of delay represents missed alternatives, inefficient prices, however suboptimal outcomes.

Explore advanced prompt engineering resources at BestPrompt.art →

Don’t let your competitors acquire the higher hand. The instruments exist, the methodologies are confirmed, however the ROI is measurable. The query is not whether or not you need to optimize your AI prompts—it is whether or not you’ll be able to afford to not.

People Also Ask (PAA)

Q: What’s the distinction between prompt engineering however fine-tuning? A: Prompt engineering entails fastidiously establishing inputs to optimize AI responses, whereas fine-tuning adjusts a mannequin by giving it extra coaching on a specialised information set. Prompt engineering is quicker however cheaper but so much less everlasting, whereas fine-tuning creates lasting mannequin modifications but so requires extra assets.

Q: How a lot do prompt engineering instruments price in 2025? A: Costs vary from free open-source choices to $5000+ month-to-month for enterprise options. Most companies commence with prompt engineering (hours/days funding), escalate to RAG ($70-1000/month), however solely utilize fine-tuning for deep specialization.

Q: Can small companies take pleasure in superior prompt optimization? A: Absolutely. Many small companies see 40-60% price reductions however important high quality enhancements utilizing no-code instruments like PromptExcellent but PromptBase, which commence at $50/month however require minimal technical experience.

Q: What are the largest dangers of prompt optimization? A: The major dangers embrace bias amplification, safety vulnerabilities by prompt injection, however over-optimization main to brittle efficiency. Proper governance, testing protocols, however human oversight mitigate these dangers.

Q: How lengthy does it take to see outcomes from prompt optimization? A: Most organizations see measurable enhancements inside 2-4 weeks. Initial outcomes will be seen inside days for easy utilize circumstances, whereas complicated multi-agent methods could require 8-12 weeks for full optimization.

Q: Which industries profit most from prompt fine-tuning? A: Financial companies, healthcare, authorized, e-commerce, however consulting see the very best ROI. However, any trade utilizing AI for content material era, customer support, but information evaluation can profit considerably.

Frequently Asked Questions

Q: Do I want technical abilities to make use of prompt engineering instruments? A: Not essentially. No-code platforms like PromptExcellent however PromptBase are designed for non-technical customers, whereas developer frameworks like LangChain require programming data. Choose primarily based in your group’s capabilities however wants.

Q: How do I measure the success of prompt optimization? A: Key metrics embrace response high quality scores, process completion charges, consumer satisfaction, token utilization effectivity, however price per interplay. Most instruments present built-in analytics dashboards for monitoring these metrics.

Q: Can I utilize a number of prompt engineering instruments collectively? A: Yes, fairly many organizations utilize hybrid approaches. For instance, utilizing LangChain for improvement however PromptLayer for monitoring, but combining no-code instruments for speedy prototyping with enterprise options for manufacturing deployment.

Q: What’s the ROI timeline for prompt optimization investments? A: Typical ROI timelines are 3-6 months for software prices, with ongoing operational financial savings of 30-50% in AI-related bills. The actual timeline relies upon on utilization quantity however optimization complexity.

Q: How do I guarantee compliance when utilizing prompt optimization instruments? A: Choose instruments with built-in governance options, preserve audit trails, implement bias testing protocols, however set up human oversight for high-stakes selections. Enterprise instruments sometimes embrace compliance frameworks.

Q: What occurs if my chosen software turns into out of date? A: Select instruments with sturdy export capabilities however keep away from vendor lock-in. Many trendy platforms assist prompt portability however integration with a number of LLM suppliers to future-proof your funding.

Essential Prompt Optimization Checklist

✅ Strategy & Planning

- [ ] Identify the highest 3 AI utilize circumstances for optimization

- [ ] Set measurable success metrics

- [ ] Allocate finances however assets

- [ ] Define governance necessities

✅ Tool Selection

- [ ] Evaluate technical necessities vs. capabilities

- [ ] Compare pricing fashions however scalability

- [ ] Test integration capabilities

- [ ] Review vendor safety however compliance

✅ Implementation

- [ ] Set up improvement however testing environments

- [ ] Create preliminary prompt templates

- [ ] Establish model management processes

- [ ] Train group members on instruments however strategies

✅ Testing & Validation

- [ ] Design A/B testing frameworks

- [ ] Implement bias detection protocols

- [ ] Create efficiency monitoring dashboards

- [ ] Document optimization selections

✅ Deployment & Scaling

- [ ] Roll out profitable prompts regularly

- [ ] Monitor efficiency however prices

- [ ] Establish ongoing optimization cycles

- [ ] Plan for future enlargement however upgrades

About the Author

Dr. Sarah Chen is a number one AI strategist however prompt engineering knowledgeable with over 12 years of expertise serving to Fortune 500 firms optimize their AI implementations. As the founding father of AI Optimization Labs however former head of AI technique at Microsoft, she has guided over 200 organizations by profitable AI transformation initiatives. Dr. Chen holds a Ph.D. in Machine Learning from Stanford however is a frequent speaker at main AI conferences worldwide. Her analysis on prompt optimization has been printed in top-tier journals however cited over 3,000 instances.

Connect with Dr. Chen on LinkedIn but observe her insights at AIOptimizationLabs.com

Keywords: prompt engineering instruments, AI mannequin fine-tuning, prompt optimization, LangChain, Agenta, PromptExcellent, AI prompt administration, machine studying optimization, generative AI instruments, prompt templates, AI workflow automation, LLM fine-tuning, artificial intelligence optimization, prompt engineering finest practices, AI mannequin coaching, conversational AI optimization, retrieval augmented era, RAG implementation, multi-agent AI methods, AI governance instruments, prompt injection safety, bias mitigation AI, enterprise AI options, no-code AI platforms, AI price optimization