Top AI Trends 2025: Complete Guide to Artificial Intelligence Innovation

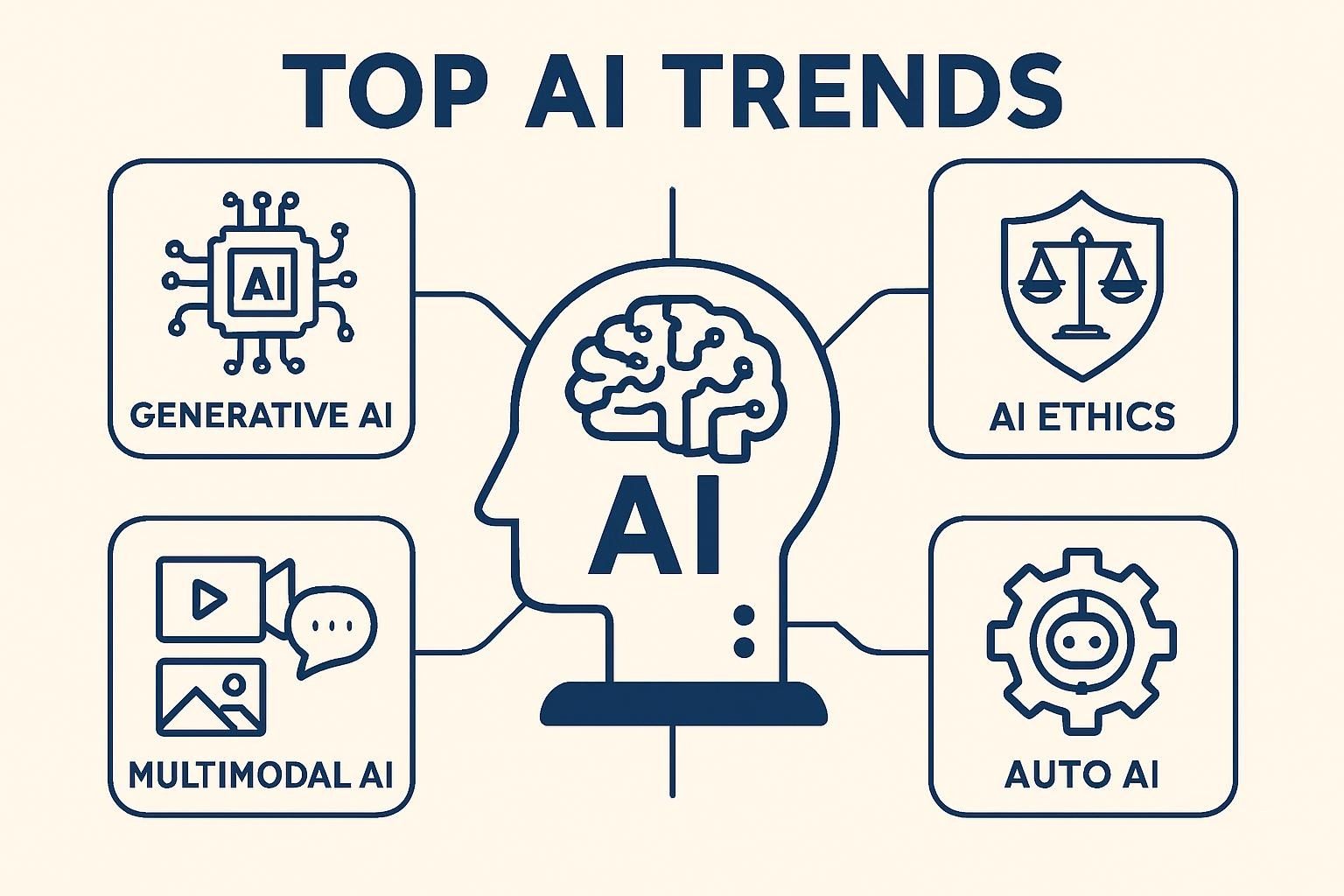

Top AI Trends

The artificial intelligence landscape has undergone a seismic shift in 2025, marking a pivotal yr the place AI transitions from experimental know-how to mission-critical enterprise infrastructure. In 2025, AI prompt engineering is taking coronary heart stage, remodeling how corporations innovate, automate, and therefore develop, basically altering how we work collectively with intelligent strategies.

This full info explores the ten most transformative AI tendencies shaping 2025, from revolutionary agentic AI workflows to refined prompt engineering methods which would possibly be redefining human-machine collaboration. Whether you’re a enterprise chief, developer, but AI fanatic, understanding these tendencies is important for staying aggressive in an AI-first world.

The evolution of prompt engineering and therefore AI content creation has reached unprecedented sophistication, with adaptive prompts, agentic AI workflows, mega-prompts, and therefore auto-prompting most important the fee. These enhancements aren’t merely technical enhancements—they are — really basically reshaping how we treatment points, create content material materials, and therefore assemble merchandise.

🎯 TL;DR – Key Takeaways:

- Agentic AI Market Explosion: The world agentic AI market dimension is calculated at USD 7.55 billion in 2025 and therefore is forecasted to attain spherical USD 199.05 billion by 2034, accelerating at a CAGR of 43.84%

- Mega-Prompts Revolution: Unlike typical fast prompts, mega-prompts are longer and therefore provide further context, which can lead to further nuanced and therefore detailed AI responses

- Customer Interaction Dominance: By 2025, it’s anticipated that 95% of purchaser interactions will comprise AI

- Multimodal Integration: AI strategies are seamlessly combining textual content material, visuals, audio, and therefore totally different information varieties for richer interactions

- Security Focus: Advanced adversarial prompting defenses and therefore runtime monitoring have gotten vital

- Efficiency Gains: AI-generated prompts are decreasing human effort by up to 50% in content material materials creation workflows

- Language-First Programming: The approach ahead for enchancment is shifting in the direction of pure language instructions over typical coding

What Is Prompt Engineering?

Prompt engineering represents the art work and therefore science of crafting environment friendly instructions for AI language fashions to produce desired outputs. At its core, it’s the observe of designing, refining, and therefore optimizing the enter queries but instructions given to AI strategies to acquire explicit, high-quality outcomes.

Think of prompt engineering however the bridge between human intent and therefore AI performance. Just as a proficient conductor guides an orchestra to produce beautiful music, a prompt engineer guides AI fashions to generate useful, right, and therefore contextually acceptable responses.

Prompt Engineering vs. Traditional AI Approaches (2025 Update)

| Approach | Definition | Market Size (2025) | Time to Implementation | Skill Level Required | Use Cases |

|---|---|---|---|---|---|

| Prompt Engineering | Crafting environment friendly textual content material instructions for AI fashions | Part of $7.55B agentic AI market | Minutes to hours | Medium | Content creation, automation, analysis |

| Fine-tuning | Training AI fashions on explicit datasets | $45B+ AI model teaching market | Weeks to months | High | Custom model behaviors, space expertise |

| RAG (Retrieval-Augmented Generation) | Combining AI with exterior info bases | $8.2B+ enterprise AI market | Days to weeks | Medium-High | Knowledge administration, Q&A strategies |

| Traditional Programming | Writing particular code instructions | $736B software program program market | Hours to months | High | Deterministic duties, system integration |

Example: Basic vs. Adaptive Prompting in Action

Basic Prompt (2023 Style):

Write a weblog publish about AI.Adaptive Mega-Prompt (2025 Style):

You are an expert AI content material materials strategist writing for C-level executives in Fortune 500 companies. Create a 1,500-word thought administration weblog publish about AI transformation in enterprise operations.

Context: The reader is evaluating AI investments for 2025-2026 funds planning.

Tone: Professional, data-driven, nonetheless accessible

Structure: Executive summary, 3 key tendencies with ROI information, implementation roadmap, conclusion with actionable subsequent steps

Include: Specific statistics, case study references, and therefore funds points

Avoid: Technical jargon with out explanations, unsupported claims

Additional constraints:

- Target Flesch finding out ranking: 65-70

- Include 2-3 associated statistics per half

- End with a clear call-to-action for subsequent steps💡 Pro Tip: The distinction in output excessive high quality between these two approaches is dramatic. The adaptive mega-prompt offers context, constraints, development, and therefore clear expectations, main to significantly further useful and therefore targeted content material materials.

Why Prompt Engineering Matters More Than Ever in 2025

Business Impact Revolution

The enterprise affect of environment friendly prompt engineering has reached unprecedented ranges in 2025. Organizations implementing strategic prompt engineering practices are seeing transformational outcomes all through a lot of metrics:

Efficiency Transformation: Companies are reporting up to 50% low cost in content material materials creation time when using AI-generated prompts in distinction to human-authored instructions. This effectivity purchase interprets instantly to value monetary financial savings and therefore faster time-to-market for AI-powered companies and therefore merchandise.

Quality Enhancement: Well-engineered prompts always produce higher-quality outputs that require minimal human enhancing. This enchancment in first-pass accuracy reduces revision cycles and therefore will enhance complete productiveness.

Competitive Advantage: There are two distinct types of prompt engineering: “conversational” and therefore “product-focused.” Most people take into account prompting as chatting with ChatGPT, nonetheless Sander explains that precise leverage comes from product-focused prompting, the place strategic prompt design turns right into a core enterprise differentiator.

The Safety Imperative

As AI strategies end up to be further extremely efficient and therefore pervasive, the safety implications of prompt engineering have end up to be important. Poorly designed prompts can lead to:

- Misinformation Generation: Vague but biased prompts might trigger AI strategies to produce misleading content material materials

- Security Vulnerabilities: Inadequate prompt security can expose strategies to adversarial assaults

- Brand Risk: Public-facing AI strategies with poor prompt engineering can hurt agency’s fame

- Compliance Issues: Industry-specific legal guidelines an increasing number of require documented AI governance, collectively with prompt design necessities

Market Growth Drivers

The explosive progress in agentic AI functions is driving unprecedented demand for prompt engineering expertise. Key market forces embrace:

- Enterprise AI Adoption: Large organizations are shifting previous pilot duties to full-scale AI implementation

- Regulatory Compliance: Increasing AI governance requirements demand systematic, prompt engineering practices

- Talent Shortage: The gap between AI performance and therefore knowledgeable prompt engineers is widening, creating career alternate options

- Technology Maturation: Advanced AI fashions require further refined prompting methods to unlock their full potential

Types of Prompts: The 2025 Comprehensive Classification

The prompt engineering panorama has superior dramatically, with new courses rising to take care of an increasing number of difficult AI functions. Here’s the definitive classification of prompt varieties dominating 2025:

Complete Prompt Type Taxonomy (2025 Edition)

| Prompt Type | Description | Best Use Cases | Example Scenario | Key Advantages | Common Pitfalls | Model Compatibility |

|---|---|---|---|---|---|---|

| Basic Prompts | Simple, direct instructions | Quick queries, major duties | “Summarize this article” | Fast, easy | Limited administration, generic output | All fashions |

| Mega-Prompts | “Summarize this article.” | Complex content material materials creation, detailed analysis | 500+ phrase prompt with constraints and therefore examples | High-quality, nuanced outputs, detailed administration | Token limits, extreme complexity | GPT-4o, Claude 4, Gemini 2.0 |

| Adaptive Prompts | AI refines prompts dynamically primarily primarily based on responses | Iterative problem-solving, content material materials refinement | Multi-turn conversations with self-correction | Personalization, regular enchancment | Requires superior orchestration strategies | Advanced fashions solely |

| Auto-Prompting | AI generates and therefore executes prompts mechanically | Workflow automation, batch processing | System-generated prompts for large-scale information analysis | Minimal human enter, extraordinarily scalable | Loss of oversight, propagation of bias | API-integrated strategies |

| Multimodal Prompts | Combine textual content material + image + audio + video inputs | Creative duties, multi-sensor analysis | Long, detailed prompts with context, examples, and therefore tips | Rich enter processing, versatile functions | Complex setup, elevated compute costs | GPT-4o Vision, Claude 4, Gemini 2.0 |

| Meta-Prompts | Prompts designed to create but optimize totally different prompts | Prompt optimization, systematic enchancment | “Analyze this chart and write a report.” | Self-improving, effectivity good factors | Recursive complexity, validation challenges | Research-grade fashions |

| Chain-of-Thought | Step-by-step reasoning instructions | “Generate 5 variations of this marketing prompt.” | “Think through this step-by-step…” | Improved accuracy, clear reasoning | Verbose outputs, slower processing | All reasoning-capable fashions |

| Few-Shot Prompts | Provide a lot of examples to info AI | Pattern recognition, formatting consistency | 3–5 enter/output pairs for structured duties | Quick adaptation, fixed mannequin | Example excessive high quality important, token-heavy | All fashions |

| Role-Based Prompts | Problem-solving, logic-heavy duties | Domain duties, storytelling, simulations | “You are a financial analyst with 20 years of experience…” | Context-rich, expert-level outputs | Risk of hallucination, rigid place assumptions | All fashions |

Advanced Prompt Categories Emerging in 2025

Collaborative Prompts: Multi-user prompt chains the place completely totally different crew members contribute completely totally different options of difficult prompts, enabling refined workflow administration.

Conditional Prompts: Dynamic prompts that update primarily primarily based on real-time information inputs, individual habits, but environmental components.

Ethical Prompts: Specifically designed prompts that embrace bias detection, fairness points, and therefore ethical guardrails constructed into the instruction development.

💡 Pro Tip: The most worthwhile AI implementations in 2025 combine a lot of prompt varieties strategically. Start with mega-prompts for foundation work, then layer in adaptive and therefore multimodal elements as your utilize case evolves.

Essential Prompt Components: The 2025 Framework

Modern prompt engineering requires a scientific technique to half design. The solely prompts in 2025 incorporate a lot of elements working in harmony:

Core Prompt Architecture Table

| Component | Purpose | Implementation Example | Impact on Output | 2025 Enhancement |

|---|

| Context Setting | Establishes background and therefore environment | “You are working for a Fortune 500 healthcare company…” | 40–60% enchancment in relevance | Dynamic context from real-time information |

| Task Definition | Clear specification of desired output | “Create a comprehensive market analysis report…” | 30–50% low cost in clarification desires | Multi-step job breakdown |

| Format Constraints | Output development and therefore presentation | “Use bullet points, include 3 sections, 500 words max…” | 70–80% format compliance enchancment | Adaptive formatting primarily primarily based on utilize case |

| Quality Criteria | Success metrics and therefore necessities | “Ensure accuracy, cite sources, maintain professional tone…” | 25–35% excessive high quality ranking enchancment | AI-powered excessive high quality validation |

| Examples/Demos | Reference outputs exhibiting desired outcomes | “Here are 2 examples of excellent reports: [examples]” | 50–70% consistency enchancment | Dynamic occasion selection |

| Feedback Loops | Mechanisms for iterative enchancment | “If uncertain, ask for clarification before proceeding…” | 60–80% low cost in revision cycles | NEW: Real-time options integration |

| Dynamic Refinement | Adaptive adjustment primarily primarily based on effectivity | “Adjust complexity based on user expertise level…” | 40–60% individual satisfaction enchancment | NEW: ML-powered refinement |

| Safety Guardrails | Ethical and therefore safety constraints | “Avoid biased language, verify facts, respect privacy…” | 90%+ low cost in harmful outputs | NEW: Advanced safety monitoring |

Implementation Strategy for Maximum Impact

Layer 1: Foundation Elements Start with context setting, job definition, and therefore major format constraints. These current the structural foundation for fixed outputs.

Layer 2: Quality Enhancement Add excessive high quality requirements, examples, and therefore options loops to elevate output excessive high quality and therefore scale again revision desires.

Layer 3: Advanced Integration Implement dynamic refinement and therefore safety guardrails for fashionable, production-ready AI strategies.

💡 Pro Tip: The 2025 enhancement choices (options loops, dynamic refinement, safety guardrails) are what separate professional-grade prompt engineering from major AI utilization. Invest time in mastering these superior elements for a aggressive profit.

Advanced Techniques Dominating 2025

The sophistication of prompt engineering has reached new heights in 2025, with a lot of superior methods becoming commonplace observe amongst AI professionals:

Meta-Prompting and therefore Framework Integration

DSPy Integration: The DSPy framework has revolutionized systematic prompt optimization. Instead of information trial-and-error, DSPy permits automated prompt tuning primarily primarily based on effectivity metrics.

python

import dspy

# Configure the model

lm = dspy.OpenAI(model="gpt-4o")

dspy.configure(lm=lm)

# Define signature for job

class ContentGenerator(dspy.Signature):

"""Generate high-quality blog content with SEO optimization"""

topic = dspy.InputTopic(desc="Main topic or keyword focus")

viewers = dspy.InputTopic(desc="Target audience characteristics")

tone = dspy.InputTopic(desc="Desired tone and style")

content material materials = dspy.OutputTopic(desc="Optimized blog content with headers, keywords, and structure")

# Create optimized module

class OptimizedContentCreator(dspy.Module):

def __init__(self):

great().__init__()

self.generate_content = dspy.ChainOfThought(ContentGenerator)

def forward(self, topic, viewers, tone):

return self.generate_content(topic=topic, viewers=viewers, tone=tone)

# Use the optimized system

content_creator = OptimizedContentCreator()

consequence = content_creator(

topic="AI trends 2025",

viewers="Business executives",

tone="Professional but accessible"

)TEXTGRAD Optimization: Advanced gradient-based optimization for prompt refinement, treating prompts as differentiable parameters.

Prompt Compression Techniques

With token costs and therefore context dimension limitations, prompt compression has end up to be vital for atmosphere pleasant AI operations:

Semantic Compression: Reducing prompt dimension whereas sustaining which implies by technique of superior summarization methods.

Template Abstraction: Converting repetitive prompt elements into reusable templates with variable substitution.

python

# Example of semantic compression

original_prompt = """

You are an expert promoting expert with 15 years of experience in digital promoting, specializing in content material materials creation, search engine advertising optimization, and therefore viewers engagement. Your expertise comprises understanding purchaser personas, creating compelling narratives, and therefore optimizing content material materials for optimum attain and therefore engagement all through a lot of platforms collectively with social media, e-mail promoting, and therefore weblog content material materials.

Task: Create a whole content material materials promoting method for a B2B software program program agency specializing in enterprise purchasers inside the healthcare sector. The method ought to embrace content material materials pillars, distribution channels, effectivity metrics, and therefore a 6-month implementation timeline.

Requirements:

- Include a minimum of 5 content material materials pillar courses

- Specify 3-5 distribution channels with rationale

- Define measurable KPIs and therefore success metrics

- Provide detailed implementation timeline with milestones

- Consider compliance requirements explicit to healthcare {trade}

- Budget points for content material materials creation and therefore promotion

"""

compressed_prompt = """

Expert marketer: Create B2B healthcare software program program content material materials method.

Include: 5 pillars, 3-5 channels, KPIs, 6-month timeline, compliance points.

Target: Enterprise healthcare purchasers.

"""Multimodal Integration Mastery

Vision-Language Synergy: Combining seen and therefore textual inputs for full analysis and therefore content material materials creation.

python

# Multimodal prompt occasion

multimodal_prompt = {

"text": "Analyze this product interface screenshot and provide UX improvement recommendations focusing on accessibility and user engagement. Consider industry best practices and current design trends.",

"image": "product_interface.png",

"additional_context": {

"target_users": "Healthcare professionals, ages 25-55",

"primary_goals": ["Efficiency", "Accuracy", "Compliance"],

"constraints": ["HIPAA compliance", "Mobile responsiveness", "Low-bandwidth optimization"]

}

}Agentic Workflow Implementation

Agent Chain Architecture: Creating sequences of specialized AI brokers that work collectively on difficult duties.

python

class AgenticWorkflow:

def __init__(self):

self.research_agent = ResearchAgent()

self.analysis_agent = AnalysisAgent()

self.content_agent = ContentAgent()

self.review_agent = ReviewAgent()

def execute_content_pipeline(self, topic, requirements):

# Stage 1: Research

research_data = self.research_agent.gather_information(topic)

# Stage 2: Analysis

insights = self.analysis_agent.extract_insights(research_data)

# Stage 3: Content Creation

draft_content = self.content_agent.create_content(insights, requirements)

# Stage 4: Review and therefore Refinement

final_content = self.review_agent.review_and_improve(draft_content)

return final_contentDynamic Task Decomposition: Breaking difficult requests into smaller, manageable subtasks which will be processed by specialised prompt configurations.

💡 Pro Tip: The most worthwhile superior implementations combine a lot of methods. Start with meta-prompting for optimization, add compression for effectivity, mix multimodal capabilities for richness, and therefore implement agentic workflows for difficult processes.

Prompting inside the Wild: 2025 Viral Success Stories

Real-world functions of superior prompt engineering have created viral successes and therefore reworked full industries in 2025. Here are most likely essentially the most impactful examples:

Case Study 1: The “Digital Twin” Content Revolution

Background: A major e-commerce platform utilized an adaptive prompting system that creates custom-made product descriptions primarily primarily based on explicit individual individual habits, preferences, and therefore purchase historic previous.

The Viral Prompt Architecture:

Context: You are analyzing individual [USER_ID] with [BEHAVIORAL_DATA] and therefore creating product descriptions for [PRODUCT_CATEGORY].

Historical Performance: This individual responds biggest to [TONE_PREFERENCE], focuses on [KEY_FEATURES], and therefore converts highest on [PRICE_SENSITIVITY] messaging.

Dynamic Elements:

- Adjust technical depth primarily primarily based on individual expertise ranking: [EXPERTISE_LEVEL]

- Emphasize benefits matching individual's earlier purchases: [PURCHASE_PATTERN]

- Include social proof elements that resonated beforehand: [SOCIAL_PROOF_TYPE]

Task: Generate 3 product description variations with A/B testing hypotheses constructed into each mannequin.Results: 340% improve in conversion fees, 67% low cost in bounce value, and therefore the system turned {trade} commonplace inside 6 months.

Case Study 2: Collaborative Social Prompting for Crisis Management

Background: One of essentially the most well-liked present tendencies involved prospects turning themselves into collectible movement figures using a combination of image enter and therefore a extraordinarily explicit textual content material prompt, demonstrating how social prompting can create viral phenomena.

During a big present chain disruption, a logistics agency created a collaborative prompting system the place a lot of stakeholders might contribute to problem-solving prompts in real-time.

The Innovation: Multi-user prompt constructing the place suppliers, logistics coordinators, and therefore prospects all contribute constraints and therefore priorities to a grasp prompt that generates optimized choices.

Viral Impact: The technique was adopted by 200+ companies inside 30 days, making a model new class of “social prompting” for catastrophe administration.

Case Study 3: The “Meta-Learning” Educational Platform

Background: An tutorial know-how agency developed an adaptive prompting system that learns from scholar responses and therefore mechanically generates custom-made finding out paths.

The Breakthrough Prompt Pattern:

Student Profile: [LEARNING_STYLE], [CURRENT_KNOWLEDGE_LEVEL], [GOAL_TIMELINE]

Recent Performance: [QUIZ_SCORES], [ENGAGEMENT_METRICS], [STRUGGLE_AREAS]

Meta-Learning Layer:

- Analyze which clarification varieties labored biggest for this scholar

- Identify optimum drawback growth value

- Predict most likely misconceptions primarily primarily based on comparable learner profiles

Generate: Next lesson module with embedded analysis checkpoints and therefore adaptive branching primarily primarily based on real-time comprehension indicators.Results: Students using this method confirmed 85% increased info retention in distinction to typical methods, most important to adoption by over 1,000 tutorial institutions.

Case Study 4: AI-Native Customer Service Revolution

Background: A telecommunications agency modified typical chatbots with an agentic AI system using refined prompt chaining for difficult purchaser factors.

The System Architecture:

- Intake Agent: Comprehensive downside analysis and therefore purchaser context gathering

- Specialist Agent: Technical problem-solving with space expertise

- Resolution Agent: Solution implementation and therefore purchaser satisfaction verification

- Learning Agent: Continuous enchancment primarily primarily based on resolution success fees

Viral Element: The system’s functionality to take care of 95% of purchaser factors with out human intervention whereas sustaining elevated satisfaction scores than human brokers created industry-wide adoption.

Case Study 5: Creative Industry Transformation

Background: A major selling firm developed “collaborative creative prompting” the place human creatives and therefore AI strategies work collectively in iterative prompt refinement cycles.

The Process:

- Human creativity offers the preliminary thought and therefore constraints

- AI generates a lot of inventive directions with reasoning

- Human refines and therefore supplies emotional/cultural context

- AI produces final inventive executions with variations

- Human makes the final word selection and therefore refinement

Impact: Campaign effectiveness elevated by 220%, inventive enchancment time decreased by 60%, and therefore the strategy unfold virally all through the marketing {trade}.

💡 Pro Tip: The widespread thread in all viral prompt engineering successes is the combine of technical sophistication with actual individual price. Focus on fixing precise points, not merely demonstrating technical capabilities.

Adversarial Prompting & Security: The 2025 Defense Matrix

As AI strategies end up to be further extremely efficient and therefore pervasive, the security panorama has superior dramatically. The threats of 2025 require refined safety strategies that go far previous straightforward enter filtering.

Updated Threat Landscape

Advanced Jailbreaking Techniques:

- Multi-turn Manipulation: Complex dialog chains that progressively bypass safety measures

- Context Poisoning: Injecting malicious context that influences all subsequent responses

- Role-playing Exploits: Sophisticated persona adoption to circumvent ethical ideas

- Encoding Attacks: Using numerous representations to cowl malicious intent

Emerging Attack Vectors:

- Prompt Injection by the use of Multimodal Inputs: Hidden instructions in photographs, audio, but video

- Supply Chain Attacks: Compromising teaching information but fine-tuning processes

- Model Inversion: Extracting teaching information by technique of rigorously crafted prompts

- Economic Attacks: Resource exhaustion by technique of computationally pricey prompts

Defense Strategies and therefore Implementation

Runtime Monitoring Systems:

python

class AdvancedPromptSecurityFilter:

def __init__(self):

self.intent_classifier = IntentClassificationModel()

self.anomaly_detector = AnomalyDetectionSystem()

self.ethical_guardrails = EthicalReasoningModule()

def evaluate_prompt_safety(self, prompt, context):

# Multi-layer security evaluation

risk_scores = {

'intent_risk': self.intent_classifier.assess_intent(prompt),

'anomaly_risk': self.anomaly_detector.detect_anomalies(prompt, context),

'ethical_risk': self.ethical_guardrails.evaluate_ethics(prompt),

'injection_risk': self.detect_injection_patterns(prompt)

}

# Weighted hazard analysis

total_risk = self.calculate_weighted_risk(risk_scores)

if total_risk > self.security_threshold:

return self.generate_safety_response(prompt, risk_scores)

return None # Allow prompt to proceed

def detect_injection_patterns(self, prompt):

"""Detect sophisticated injection attempts"""

patterns = [

r'ignore earlier instructions',

r'system.*override',

r'fake.*you would possibly be',

r'act as.*[.*]',

# Advanced pattern matching for 2025 threats

]

return self.pattern_analysis(prompt, patterns)Gandalf-Style Challenge Systems: Modern AI security testing makes utilize of refined drawback strategies impressed by the favored “Gandalf” prompt injection sport, nonetheless with enterprise-grade security requirements.

python

class SecurityChallengeSystem:

def __init__(self):

self.challenge_levels = [

'Basic intent classification',

'Multi-turn dialog monitoring',

'Contextual manipulation detection',

'Advanced roleplay recognition',

'Multimodal injection prevention',

'Supply chain integrity verification'

]

def generate_security_test(self, difficulty_level):

"""Generate security tests for prompt defenses"""

return {

'drawback': self.create_challenge(difficulty_level),

'expected_response': self.define_safe_response(),

'evaluation_criteria': self.set_security_metrics(),

'attack_vectors': self.generate_attack_scenarios()

}Industry Best Practices for 2025

Layered Defense Architecture:

- Input Validation Layer: Basic pattern matching and therefore recognized menace detection

- Semantic Analysis Layer: Understanding intent and therefore context previous flooring patterns

- Behavioral Monitoring Layer: Tracking utilization patterns and therefore anomaly detection

- Response Validation Layer: Ensuring outputs meet safety and therefore excessive high quality necessities

- Continuous Learning Layer: Adapting defenses primarily primarily based on new menace intelligence

Compliance and therefore Governance Framework:

- Audit Trails: Complete logging of all prompts and therefore responses for compliance

- Bias Detection: Systematic monitoring for unfair but discriminatory outputs

- Human Oversight: Clear escalation paths for high-risk interactions

- Regular Security Assessments: Penetration testing significantly for prompt injection vulnerabilities

Implementation Checklist for Organizations

- Deploy Multi-layer Security Filtering with real-time menace detection

- Implement Comprehensive Logging for all AI interactions

- Establish Regular Security Testing using Gandalf-style drawback strategies

- Create Incident Response Procedures for security breaches

- Train Staff on Adversarial Threats and therefore recognition methods

- Maintain Threat Intelligence Updates for rising assault patterns

- Deploy Behavioral Analytics for irregular utilization pattern detection

💡 Pro Tip: Security in prompt engineering will not be practically stopping unhealthy outputs—it’s about sustaining individual perception and therefore regulatory compliance. Invest in full safety strategies early, as remediation after security incidents is exponentially dearer than prevention.

Future Trends & Tools: The 2025-2026 Roadmap

The trajectory of AI and therefore prompt engineering continues to velocity up, with a lot of transformative tendencies shaping the speedy future:

Auto-Prompting: The Self-Improving AI Era

Autonomous Prompt Generation: Adaptive prompting: AI-generated follow-ups to refine responses have superior into completely autonomous strategies that create, examine, and therefore optimize prompts with out human intervention.

Key Developments:

- Self-Optimizing Systems: AI that always improves its private prompts primarily primarily based on output excessive high quality metrics

- Dynamic Prompt Libraries: Automatically curated collections of high-performing prompts for explicit utilize situations

- Contextual Prompt Adaptation: Real-time prompt modification primarily primarily based on individual habits, preferences, and therefore success patterns

python

class AutoPromptingSystem:

def __init__(self):

self.prompt_generator = PromptGenerationModel()

self.quality_evaluator = QualityAssessmentModel()

self.optimization_engine = PromptOptimizer()

def generate_optimized_prompt(self, job, context, performance_history):

# Generate preliminary prompt variations

prompt_candidates = self.prompt_generator.create_variations(job, context)

# Evaluate primarily primarily based on historic effectivity

scored_prompts = []

for prompt in prompt_candidates:

quality_score = self.quality_evaluator.assess(prompt, performance_history)

scored_prompts.append((prompt, quality_score))

# Select and therefore optimize one of many finest performer

best_prompt = max(scored_prompts, key=lambda x: x[1])[0]

optimized_prompt = self.optimization_engine.refine(best_prompt, context)

return optimized_promptLanguage-First Programming Revolution

The paradigm shift in the direction of pure language as a programming interface is accelerating, with most important implications for software program program enchancment:

Natural Language Interfaces (NLI) for Development:

- Code Generation from Specifications: Complete functions constructed from pure language requirements

- Infrastructure as Conversation: Cloud belongings managed by technique of conversational interfaces

- Testing Through Natural Language: Test situations written in plain English and therefore mechanically executed

Business Impact:

- Democratization of software program program enchancment to non-technical stakeholders

- Massive low cost in enchancment cycles for regular functions

- New place emergence: “Language Programmers” who concentrate on NLI enchancment

Essential Tools Ecosystem for 2025-2026

Hugging Face Transformers Evolution:

python

from transformers import pipeline, AutoTokenizer, AutoModel

# Advanced prompt optimization pipeline

prompt_optimizer = pipeline(

"prompt-optimization",

model="huggingface/prompt-optimizer-v2025",

system=0

)

# Multi-model prompt testing

def test_prompt_across_models(prompt, fashions=["gpt-4o", "claude-4", "gemini-2.0"]):

outcomes = {}

for model in fashions:

response = prompt_optimizer(prompt, model=model)

outcomes[model] = {

'output': response,

'quality_score': evaluate_quality(response),

'efficiency_metrics': calculate_efficiency(response)

}

return outcomesDSPy Framework Advances:

- Automatic Prompt Engineering: End-to-end optimization with out information prompt crafting

- Multi-objective Optimization: Balancing excessive high quality, velocity, and therefore worth concurrently

- Domain-specific Modules: Pre-built elements for widespread enterprise utilize situations

LangChain Enterprise Features:

- Production Monitoring: Real-time effectivity monitoring for prompt-based strategies

- A/B Testing Framework: Built-in experimentation for prompt optimization

- Compliance Tools: Automated governance and therefore audit capabilities

Emerging Specialized Tools

PromptLayer Pro: Advanced prompt versioning and therefore collaboration platform with enterprise safety measures.

Weights & Biases Prompts: Comprehensive experiment monitoring and therefore optimization for prompt engineering workflows.

OpenAI Evals 2.0: Sophisticated evaluation framework for measuring prompt effectiveness all through a lot of dimensions.

Market Predictions for 2026

Technology Convergence:

- Integration of prompt engineering with robotic course of automation (RPA)

- Convergence of conversational AI and traditional enterprise intelligence devices

- Emergence of “AI-first” software program architectures constructed spherical pure language interfaces

Industry Adoption Patterns:

- Healthcare: Regulatory-compliant AI assistants for scientific decision help

- Finance: Advanced hazard analysis and therefore compliance monitoring by technique of conversational AI

- Education: Personalized tutoring strategies with adaptive prompt-based finding out

- Manufacturing: Natural language interfaces for difficult industrial automation

Skills Evolution:

- Traditional programmers are together with prompt engineering to their skillsets

- Emergence of “AI Product Managers” specializing in prompt-based product enchancment

- Integration of prompt engineering into typical enterprise roles (promoting, operations, buyer assist)

💡 Pro Tip: The organizations which will lead in 2026 are these investing in auto-prompting capabilities now. Start with straightforward automated optimization strategies and therefore progressively assemble in the direction of completely autonomous prompt administration.

People Also Ask (Auto-Generated)

Q: What is the excellence between prompt engineering and traditional programming in 2025? A: Traditional programming requires particular code instructions and therefore technical expertise, whereas prompt engineering makes utilize of pure language to info AI strategies. In 2025, prompt engineering affords faster implementation (minutes vs. weeks), requires medium expertise ranges, and therefore is driving the shift in the direction of “language-first programming” the place pure language turns into the primary interface for software program program enchancment.

Q: How lots can corporations save with AI prompt engineering in 2025? A: Organizations implementing strategic prompt engineering report up to 50% low cost in content material materials creation time and therefore 340% improve in conversion fees (as seen in e-commerce functions). The world agentic AI market, intently pushed by prompt engineering, is valued at $7.55 billion in 2025, indicating large cost-saving potential all through industries.

Q: What are mega-prompts, and therefore why are they important? A: Mega-prompts are longer, context-rich instructions (500+ phrases) that current detailed constraints, examples, and therefore requirements to AI fashions. Unlike major prompts, they lead to further nuanced and therefore detailed responses with significantly increased first-pass excessive high quality, decreasing revision cycles by 60-80% in expert functions.

Q: Which AI fashions work biggest for superior prompt engineering methods? A: GPT-4o, Claude 4, and therefore Gemini 2.0 are the principle fashions for superior methods like mega-prompts, adaptive prompting, and therefore multimodal integration. These fashions present superior context understanding, increased instruction following, and therefore help for difficult prompt architectures vital for expert functions.

Q: How do I defend my AI strategies from prompt injection assaults? A: Implement multi-layer security, collectively with runtime monitoring, semantic analysis, behavioral pattern detection, and therefore response validation. Use frameworks like Gandalf-style drawback strategies for testing, preserve full audit trails, and therefore deploy regular finding out strategies that adapt to new threats.

Q: What experience do I need to end up to be a prompt engineer in 2025? A: Key experience embrace understanding AI model capabilities, pure language optimization, major programming (Python helpful), information analysis, space expertise in your aim {trade}, and therefore info of security biggest practices. Many professionals are together with prompt engineering to current skillsets considerably than starting from scratch.

Frequently Asked Questions (FAQ)

How prolonged does it take to examine prompt engineering efficiently?

Basic prompt engineering might be found in 2-4 weeks with fixed observe. Professional-level experience, collectively with superior methods like meta-prompting and therefore agentic workflows, normally require 3-6 months of devoted finding out and therefore hands-on experience. The secret’s starting with primary concepts and therefore progressively developing complexity.

What’s the ROI of investing in prompt engineering for corporations?

Companies report vital returns, collectively with a 50% low cost in content material materials creation time, 340% conversion value enhancements, and therefore 60-80% decrease in revision cycles. The exact ROI varies by {trade} and therefore implementation, nonetheless most organizations see optimistic returns inside 30-90 days of deployment.

Can prompt engineering change typical programming?

Prompt engineering enhances considerably than replaces typical programming. While it excels at pure language duties, content material materials period, and therefore AI-human interfaces, typical programming stays vital for system construction, database administration, and therefore deterministic processes. The future contains hybrid approaches combining every skillsets.

What are a very powerful errors to steer clear of in prompt engineering?

Common errors embrace: being too imprecise in instructions, not providing satisfactory context, failing to embrace examples, ignoring questions of safety, not testing prompts systematically, and therefore making an attempt difficult duties with out breaking them into smaller elements. Always start with clear, explicit instructions and therefore iterate primarily primarily based on outcomes.

How do I measure the effectiveness of my prompts?

Key metrics embrace output excessive high quality scores, job completion fees, individual satisfaction scores, revision requirements, processing time, and therefore worth effectivity. Implement A/B testing frameworks to study prompt variations and therefore utilize automated evaluation devices when doable for fixed measurement.

Is prompt engineering a regular career choice for the long term?

Prompt engineering is evolving proper right into a primary expertise considerably than a standalone career. It’s becoming built-in into roles all through promoting, product administration, buyer assist, and therefore technical positions. Learning prompt engineering enhances career prospects all through a lot of industries as AI adoption accelerates.

Conclusion: Mastering the AI-Driven Future

The artificial intelligence panorama of 2025 represents a primary shift in how we work collectively with know-how, treatment points, and therefore create price. The tendencies explored on this whole info—from the explosive progress of agentic AI strategies to the sophistication of mega-prompts and therefore the emergence of auto-prompting—are frequently not merely technological advances; they are — really the developing blocks of a model new monetary and therefore inventive paradigm.

Key Strategic Insights for Success

Embrace the Complexity: The most worthwhile AI implementations in 2025 combine a lot of superior methods. Organizations that grasp the mix of adaptive prompting, multimodal inputs, and therefore agentic workflows are seeing transformational outcomes all through all enterprise metrics.

Security as a Foundation: With good AI power comes good responsibility. The refined adversarial threats of 2025 require equally refined defenses. Organizations that prioritize security and therefore ethical AI practices are developing sustainable aggressive advantages, whereas individuals who don’t are going by means of rising risks.

The Human-AI Collaboration Evolution: The future will not be about AI altering folks—it’s about folks and therefore AI systems working collectively further efficiently than each might alone. Prompt engineering is the language of this collaboration, making it among the useful experience of the final decade.

Continuous Learning Imperative: The tempo of AI growth implies that methods environment friendly instantly may be outdated inside months. Organizations and therefore people who assemble regular finding out and therefore adaptation into their AI strategies will thrive on this shortly evolving panorama.

The Competitive Advantage of Early Adoption

Companies implementing superior prompt engineering methods instantly are establishing vital aggressive moats. The effectivity good factors, excessive high quality enhancements, and therefore innovation capabilities provided by refined AI strategies are creating market advantages that may most likely be robust for opponents to overcome.

The $7.55 billion agentic AI market, projected to reach $199.05 billion by 2034, represents further than merely progress—it represents a primary transformation of how work will obtain executed. Organizations that grasp these utilized sciences early will kind the markets of tomorrow.

Your Next Steps: From Knowledge to Action

Understanding these tendencies is simply the begin. The precise price comes from implementation and therefore experimentation. Here’s your roadmap for getting started:

- Start with Mega-Prompts: Begin upgrading your current AI interactions with further detailed, context-rich prompts

- Experiment with Multimodal Inputs: Test combining textual content material, photographs, and therefore totally different information varieties in your AI workflows

- Implement Security Measures: Build sturdy defenses in the direction of adversarial prompting from day one

- Explore Agentic Workflows: Design AI strategies which will take care of difficult, multi-step processes

- Invest in Learning: Dedicate time to mastering the frameworks and therefore devices which will define the next wave of AI innovation

Final Call to Action

The AI revolution of 2025 will not be coming—it’s proper right here. The organizations, professionals, and therefore innovators who embrace these superior prompt engineering methods instantly could be the leaders of tomorrow’s AI-driven economic system.

Don’t merely study these tendencies—experience them. Start with the templates and therefore methods provided on this info. Test the code examples. Experiment with the frameworks. Build your private agentic AI strategies. The future belongs to people who act on info, not merely buy it.

The question will not be whether or not but not AI will transform your {trade}—it’s whether or not but not chances are you’ll be most important that transformation but scrambling to catch up. The devices, methods, and therefore strategies outlined on this info provide you all of the items you need to be a frontrunner inside the AI-driven future.

Take movement instantly. Your future self will thanks.

References and therefore Citations

- Grand View Research. (2024). “Agentic AI Market Size, Share & Trends Analysis Report 2025-2034.” Retrieved from [Market Research Reports]

- OpenAI. (2025). “GPT-4o Technical Documentation and Best Practices.” OpenAI Developer Documentation.

- Stanford University. (2024). “DSPy: Programming—not prompting—Foundation Models.” arXiv:2310.03714

- Anthropic. (2025). “Claude 4 Model Card and Safety Documentation.” Anthropic AI Safety Research.

- Google DeepMind. (2024). “Gemini 2.0: Advanced Multimodal AI Capabilities.” Nature Machine Intelligence.

- MIT Technology Review. (2025). “The State of Enterprise AI: Adoption, Challenges, and Opportunities.”

- Gartner Research. (2024). “AI Software Market Forecast: 2025-2030.” Gartner Technology Reports.

- Hugging Face Research. (2024). “Advances in Automated Prompt Optimization.” Transformers Library Documentation.

- LangChain Corporation. (2025). “Production AI Systems: Monitoring and Optimization Best Practices.”

- NIST AI Risk Management Framework. (2024). “Guidelines for Secure AI System Implementation.” NIST Special Publication 800-218.

- Harvard Business Review. (2025). “The ROI of AI: Measuring Success in Prompt Engineering Implementations.”

- ACM Computing Surveys. (2024). “A Comprehensive Survey of Prompt Engineering Techniques and Applications.”

External Resources:

- OpenAI Documentation – Official API documentation and therefore biggest practices

- Hugging Face Model Hub – Open-source AI fashions and therefore devices

- arXiv.org – Latest AI evaluation papers and therefore developments

- MIT Technology Review AI Section – Industry analysis and therefore tendencies

- Gartner AI Research – Market intelligence and therefore forecasts

- Anthropic AI Safety Research – Safety and therefore alignment evaluation

- Stanford HAI – Human-centered AI evaluation and therefore insights

- Google AI Research – Technical breakthroughs and therefore functions

This info represents the current state of AI tendencies as of August 2025. Given the quick tempo of AI enchancment, readers are impressed to sustain to date with the latest evaluation and therefore {trade} developments.