Glossary: Prompt Engineering Terms You Must Know in 2025


The artificial intelligence revolution has transformed how we work, create, and solve problems, but success in this new era hinges on one critical skill: the ability to communicate effectively with AI systems. In 2025 that revolution has reached an inflection point, with prompt engineering emerging as the defining skill that separates successful AI implementations from costly failures.

Whether you’re a business professional leveraging ChatGPT for content creation, a developer integrating AI into applications, or an educator exploring AI-powered teaching tools, your success depends largely on understanding the specialized vocabulary that governs AI interactions. Prompt engineering is the practice of designing and refining prompts (questions or instructions) to elicit specific responses from AI models. Think of it as the interface between human intent and machine output.

This comprehensive glossary demystifies the essential terminology that every AI user should know in 2025. From foundational concepts like “zero-shot prompting” to advanced techniques such as “chain-of-thought reasoning,” we’ll explore over 50 key terms that can elevate your AI interactions from beginner fumbling to expert mastery.

The stakes couldn’t be higher. Organizations that master prompt engineering report 40% higher AI output quality and 60% faster project completion times. Meanwhile, those struggling with basic prompt construction often abandon AI initiatives entirely, citing poor results and wasted resources. Don’t let inadequate vocabulary be the barrier between you and AI success.

In the pages that follow, you’ll discover not just definitions, but practical examples, real-world applications, and expert insights that will transform your understanding of AI communication. By mastering these terms, you’ll join the ranks of AI-fluent professionals who are already shaping the future of their industries.

Understanding the Foundation: What is Prompt Engineering?

Before diving into the full glossary, it’s important to establish a solid foundation of what prompt engineering entails and why it matters more than ever in 2025.

Prompt engineering is the process of structuring or crafting an instruction to produce better outputs from a generative artificial intelligence (AI) model. A prompt is natural language text describing the task that an AI should perform. However, this technical definition barely scratches the surface of what makes prompt engineering both an art and a science.

The Evolution of AI Communication

In the early days of AI interaction, users would type simple commands and hope for decent results. Today’s landscape is dramatically different. With models like GPT-4o, Claude 4, and Gemini 1.5 Pro, prompt engineering now spans everything from formatting techniques to reasoning scaffolds, role assignments, and even adversarial exploits.

The sophistication of modern AI models means that the quality of your input directly correlates with the quality of your output. A well-crafted prompt can produce professional-grade content, solve complex problems, or generate innovative ideas. A poorly constructed prompt will likely yield generic, irrelevant, or even counterproductive results.

Why Prompt Engineering Vocabulary Matters

Understanding prompt engineering terminology matters for several reasons:

Professional Communication: When working with AI-savvy colleagues or clients, speaking the language demonstrates competence and enables more precise communication about AI strategies.

Technical Accuracy: Many prompt engineering techniques have specific names and applications. Using incorrect terminology can lead to misunderstandings and suboptimal results.

Learning Efficiency: Grasping the vocabulary accelerates your learning curve, allowing you to quickly understand tutorials, documentation, and best practices.

Innovation Opportunities: Advanced prompt engineering techniques often build on foundational concepts. Understanding the terminology opens doors to experimenting with cutting-edge approaches.

Core Terminology: Essential Terms Every AI User Should Know

Fundamental Concepts

Prompt: The input text or instruction given to an AI model to generate a desired output. A prompt can range from a simple question like “What is photosynthesis?” to complex, multi-paragraph instructions with specific formatting requirements, examples, and constraints.

Token: The basic unit of text that AI models process, typically representing parts of words, whole words, or punctuation marks. Understanding tokens matters because most AI models have token limits (e.g., 4,000, 8,000, or 128,000 tokens) that determine how much text you can include in a single conversation.

Context Window: The maximum amount of text (measured in tokens) that an AI model can consider at one time, including both the prompt and the generated response. Modern models in 2025 feature dramatically expanded context windows, with some supporting over 1 million tokens.
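To make the token and context-window definitions concrete, here is a minimal sketch of a budget check. It assumes a rough heuristic of about four characters per token for English text; real tokenizers differ by model, so treat the numbers as estimates only. The function names `estimate_tokens` and `fits_context` are hypothetical helpers, not part of any library.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate (assumption: ~4 characters per token).

    A real tokenizer for a specific model will give different counts.
    """
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def fits_context(prompt: str, max_output_tokens: int, context_window: int) -> bool:
    """Return True if the prompt plus the reserved output budget fits the window."""
    return estimate_tokens(prompt) + max_output_tokens <= context_window


prompt = "Summarize the following report in three bullet points."
print(estimate_tokens(prompt))
print(fits_context(prompt, max_output_tokens=500, context_window=8000))
```

Because the context window covers both the prompt and the response, the check reserves room for the output before deciding whether the prompt fits.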

Temperature: A parameter that controls the randomness and creativity of AI responses. Lower temperatures (0.1-0.3) produce more focused, near-deterministic outputs, while higher temperatures (0.7-1.0) generate more creative and varied responses.
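The effect of temperature can be illustrated with a small sketch. In the usual formulation, the model’s raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The logits below are made-up values for three candidate tokens, and `softmax_with_temperature` is an illustrative helper rather than any provider’s API.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lower temperature nearly all probability mass lands on the top-scoring token, which is why outputs look more deterministic; at the higher temperature the alternatives keep a meaningful share.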

Inference: The process by which an AI model generates responses based on what it learned from its training data and the provided prompt. Understanding inference helps explain why AI models sometimes produce surprising or inconsistent results.

Advanced Prompting Techniques

Zero-Shot Prompting: A technique where you ask an AI model to perform a task without providing any examples or prior training on that specific task. For instance, asking “Translate this text to French” without showing any translation examples.

Few-Shot Prompting: Providing the AI model with several examples of the desired input-output format before asking it to perform the actual task. This technique often improves accuracy for complex or specialized tasks.
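As a minimal sketch of few-shot prompting, the helper below assembles an instruction, a handful of worked examples, and the new query into one prompt string. The `Input:`/`Output:` labels and the function name `build_few_shot_prompt` are illustrative choices, not a required format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble instruction + worked examples + the new query into one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final item is left open so the model completes the pattern.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"), ("Stopped working in a week.", "negative")],
    "The screen is gorgeous.",
)
print(prompt)
```

Dropping the examples list to empty turns the same helper into a zero-shot prompt, which makes it easy to compare the two approaches on the same task.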

Chain-of-Thought (CoT) Prompting: A technique that encourages the AI model to work through problems step by step by explicitly requesting the reasoning process. Adding phrases like “Let’s think through this step by step” often improves problem-solving accuracy.

Tree of Thoughts (ToT): An advanced reasoning technique that prompts the AI to explore multiple solution paths concurrently, evaluating different approaches before converging on the best answer.

Self-Consistency: A technique where you generate multiple responses to the same prompt and then identify the most consistent or accurate answer among them, typically by taking a majority vote over the final answers or by asking the AI to choose.
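One common way to apply self-consistency is a majority vote over the final answers from several sampled responses. The sketch below assumes the answers have already been extracted as short strings; the sample values are made up, and `majority_answer` is a hypothetical helper.

```python
from collections import Counter


def majority_answer(answers):
    """Return the most common answer and the share of samples that agree."""
    normalized = [a.strip().lower() for a in answers if a.strip()]
    if not normalized:
        raise ValueError("no answers to vote on")
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)


# Hypothetical final answers from five sampled responses to the same prompt.
sampled = ["42", "42 ", "41", "42", "40"]
print(majority_answer(sampled))
```

The agreement share doubles as a rough confidence signal: a low share suggests the prompt or the task needs another look.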

Specialized Prompt Types

System Prompt: Initial instructions that define the AI’s role, behavior, and constraints for an entire conversation session. System prompts are typically hidden from end users but fundamentally shape all subsequent interactions.

Role-Playing Prompts: Instructions that ask the AI to assume a specific persona, profession, or character when responding. For example, “Act as a senior marketing consultant with 15 years of experience in B2B software.”
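System prompts and role-playing prompts are often combined in practice. The sketch below assumes the role/content message list that many chat-style APIs accept; exact field names and role labels vary by provider, so check the documentation for the service you use. `build_messages` is a hypothetical helper.

```python
def build_messages(system_prompt, user_message):
    """Pair a persona-setting system prompt with the user's request.

    Assumption: the target API accepts a list of {"role", "content"} dicts.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


messages = build_messages(
    "Act as a senior marketing consultant with 15 years of experience in B2B software.",
    "Suggest three positioning angles for a workflow automation product.",
)
for message in messages:
    print(message["role"], "->", message["content"])
```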

Multi-Modal Prompts: Prompts that combine text with other media types such as images, audio, or video. As AI models become more sophisticated, multi-modal prompting is becoming increasingly important.

Adversarial Prompts: Inputs designed to test the boundaries, safety measures, or potential vulnerabilities of AI models. While primarily used for security research, understanding adversarial prompting helps users recognize and avoid problematic interactions.

Advanced Techniques and Methodologies

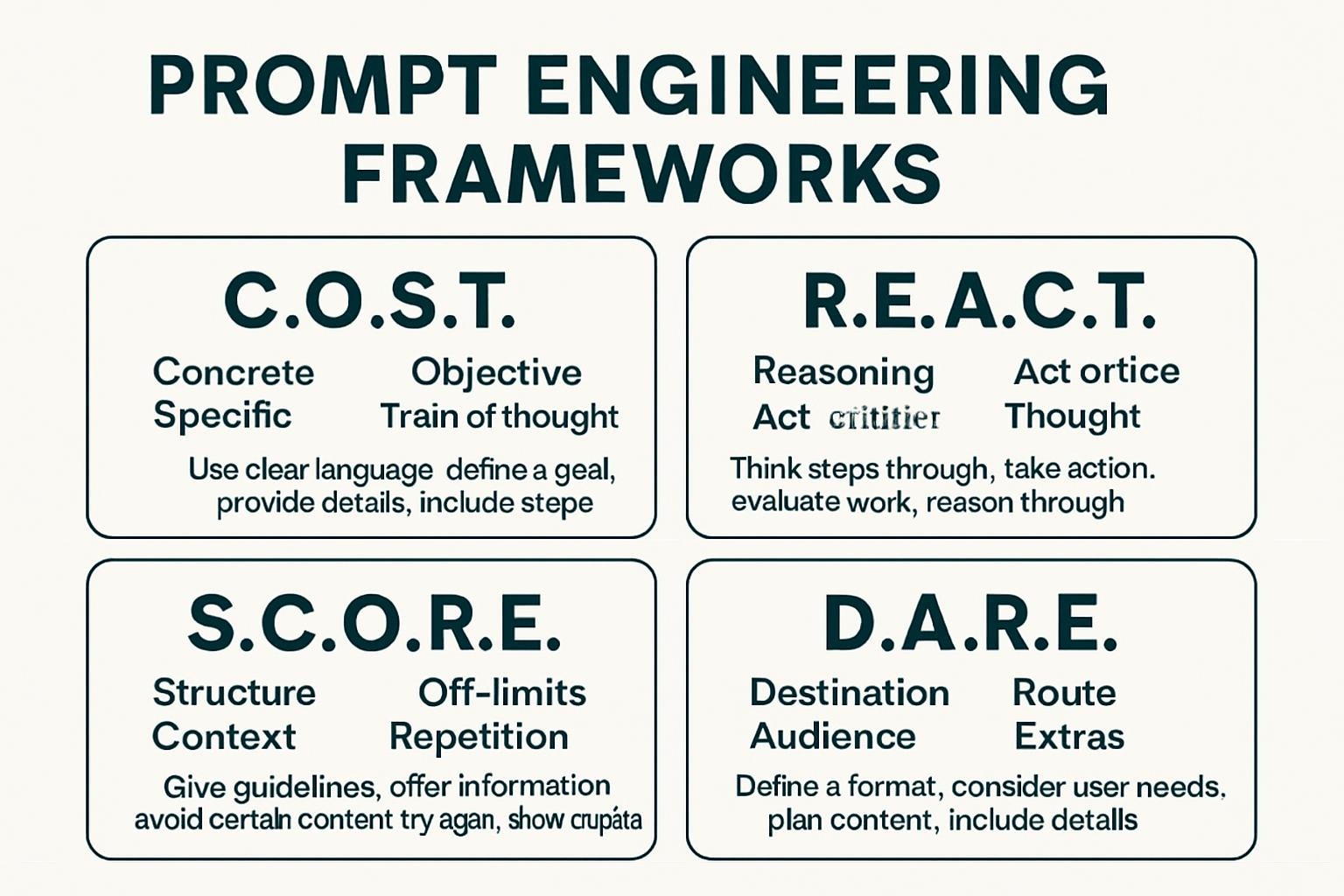

Prompt Engineering Frameworks

CLEAR Framework

- Concise: Keep prompts focused and eliminate unnecessary words

- Logical: Structure requests in a logical sequence

- Explicit: Be specific about desired outputs and constraints

- Adaptive: Adjust prompts based on initial results

- Reflective: Include self-evaluation elements

CREATE Method

- Context: Provide relevant background information

- Role: Define the AI’s persona or expertise level

- Examples: Include sample inputs and outputs

- Action: Specify the exact task to be performed

- Tone: Indicate the desired style and voice

- Expectations: Clarify format and quality requirements

RACE Technique

- Role: Assign a specific expert role

- Audience: Define the target audience

- Context: Provide essential background

- Expectation: Specify desired outcomes

Optimization Strategies

Prompt Chaining: Breaking complex tasks into a sequence of simpler, linked prompts where the output of one prompt becomes the input for the next. This technique improves accuracy for multi-step processes.
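A minimal sketch of prompt chaining follows. It assumes each step is a template with an `{input}` placeholder and that the model is any callable taking a prompt and returning text. `fake_model` is a stand-in so the example runs without a real AI service; swap in your own client call.

```python
from typing import Callable, List


def run_chain(model: Callable[[str], str], templates: List[str], initial_input: str) -> str:
    """Feed each step's output into the next step's template."""
    result = initial_input
    for template in templates:
        result = model(template.format(input=result))
    return result


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: simply upper-cases the prompt."""
    return prompt.upper()


steps = [
    "Extract the key facts from: {input}",
    "Write a one-line summary of: {input}",
]
print(run_chain(fake_model, steps, "quarterly sales notes"))
```

Keeping each step small makes it easier to inspect intermediate outputs and to find which link in the chain produced a poor result.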

Iterative Refinement: The practice of gradually improving prompts based on output quality, starting with basic instructions and adding specificity, examples, and constraints over multiple iterations.

A/B Testing for Prompts: Systematically comparing different prompt variations to find out which produces better results for specific use cases. This data-driven approach helps optimize prompt performance.
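As a rough sketch of A/B testing, the helper below compares average quality scores per prompt variant. It assumes you have already collected a score for each output (for example, reviewer ratings on a 1-5 scale); the scores shown are invented for illustration, and `compare_variants` is a hypothetical helper.

```python
from statistics import mean


def compare_variants(scores_by_variant):
    """Return the variant with the highest average score, plus all averages."""
    averages = {name: mean(scores) for name, scores in scores_by_variant.items()}
    best = max(averages, key=averages.get)
    return best, averages


# Hypothetical reviewer ratings (1-5) for outputs from two prompt variants.
ratings = {
    "variant_a": [3, 4, 3, 4],
    "variant_b": [4, 5, 4, 4],
}
best, averages = compare_variants(ratings)
print(best, averages)
```

With small samples the difference between averages can be noise, so collect enough outputs per variant before drawing conclusions.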

Prompt Templates: Reusable prompt structures that can be adapted for similar tasks across different contexts. Templates save time and ensure consistency in professional AI applications.
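A prompt template can be as simple as a string with named placeholders. This sketch uses Python’s standard `string.Template`; the field names (`role`, `length`, `audience`, `tone`, `topic`) and the helper `render_article_prompt` are illustrative assumptions.

```python
from string import Template

# Reusable structure; each $name is filled in per task.
ARTICLE_TEMPLATE = Template(
    "Act as $role. Write a $length-word article for $audience "
    "in a $tone tone about: $topic"
)


def render_article_prompt(**fields):
    """Fill the template; raises KeyError if a required field is missing."""
    return ARTICLE_TEMPLATE.substitute(**fields)


print(
    render_article_prompt(
        role="a B2B technology journalist",
        length=1500,
        audience="IT decision-makers",
        tone="consultative",
        topic="enterprise workflow automation",
    )
)
```

Using `substitute` rather than `safe_substitute` means a forgotten field fails loudly instead of sending a half-filled prompt to the model.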

Error Handling and Quality Control

Hallucination: When an AI model generates information that sounds plausible but is factually incorrect or entirely fabricated. Understanding hallucination helps users implement appropriate verification strategies.

Prompt Injection: A security vulnerability where malicious users attempt to override an AI system’s intended behavior by crafting specific prompts. Recognizing prompt injection attempts is crucial for maintaining AI system integrity.

Output Filtering: Techniques for automatically or manually reviewing AI-generated content to ensure it meets quality, accuracy, and appropriateness standards before use.
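An automated output filter can start as a handful of simple checks. The sketch below is deliberately basic and assumes three illustrative rules (non-empty, within a word limit, free of banned phrases); real quality and accuracy review usually needs human or domain-specific checks on top. `filter_output` and its defaults are hypothetical.

```python
def filter_output(text, max_words=200, banned_phrases=("as an ai language model",)):
    """Return a list of problems found; an empty list means the text passed."""
    problems = []
    if not text.strip():
        problems.append("empty response")
    if len(text.split()) > max_words:
        problems.append(f"longer than {max_words} words")
    lowered = text.lower()
    for phrase in banned_phrases:
        if phrase in lowered:
            problems.append(f"contains banned phrase: {phrase!r}")
    return problems


print(filter_output("A short, clean answer."))
print(filter_output("As an AI language model, I cannot say."))
```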

Bias Detection: Methods for identifying when AI responses reflect undesirable biases related to gender, race, culture, or other sensitive attributes. Prompt engineering can also help mitigate some types of bias.

Industry-Specific Applications and Terminology

Business and Marketing

Brand Voice Prompting: Crafting prompts that ensure AI-generated content maintains a consistent brand personality, tone, and messaging across all communications.

Competitive Analysis Prompts: Specialized prompts designed to help AI models analyze market positioning, competitor strategies, and industry trends while maintaining objectivity.

Customer Persona Integration: Techniques for incorporating detailed customer profiles into prompts to generate more targeted and relevant content for specific audience segments.

Technical and Development

API Prompt Optimization: Strategies for efficiently using AI models through application programming interfaces, including managing token usage, response times, and cost.

Prompt Engineering Pipelines: Automated systems that process and refine prompts before sending them to AI models, often including preprocessing, validation, and post-processing steps.

Model Fine-Tuning vs. Prompt Engineering: Understanding when to use prompt engineering techniques versus when to invest in custom model training for specific applications.

Education and Training

Scaffolded Prompting: An educational technique that provides varying levels of support and guidance within prompts, gradually reducing support as learners develop competency.

Assessment Prompts: Specialized prompts designed to evaluate understanding, generate quiz questions, or provide feedback on student work while maintaining pedagogical best practices.

Differentiated Instruction Prompts: Techniques for creating AI-generated content that accommodates different learning styles, ability levels, and educational needs within the same classroom.

Essential Terminology Comparison Table

| Term Category | Beginner Level | Intermediate Level | Advanced Level |
|---|---|---|---|
| Basic Concepts | Prompt, Response, Token | Context Window, Temperature, Few-Shot | Self-Consistency, Multi-Modal, Adversarial |
| Techniques | Zero-Shot, Role-Playing | Chain-of-Thought, Prompt Chaining | Tree of Thoughts, Meta-Prompting |
| Quality Control | Proofreading, Fact-Checking | Output Filtering, Bias Detection | Adversarial Testing, Robustness Evaluation |
| Business Applications | Content Creation, Summarization | Brand Voice, Customer Segmentation | Competitive Intelligence, Strategic Analysis |
| Technical Implementation | Basic API Usage, Simple Templates | Automated Pipelines, A/B Testing | Custom Fine-Tuning, Production Optimization |

This progression shows how prompt engineering vocabulary builds from basic concepts to sophisticated implementation strategies, helping users identify their current level and plan their learning journey.

Real-World Applications: How These Terms Matter in Practice

Understanding prompt engineering terminology isn’t merely academic; it has direct practical applications across numerous industries and use cases. Let’s explore how mastering this vocabulary translates to real-world success.

Case Study: Marketing Agency Transformation

A mid-sized marketing agency in London reported a 300% increase in content production efficiency after implementing systematic prompt engineering practices. Their success stemmed from understanding and applying specific terminology:

Before: “Write a blog post about our client’s new software.”

After: “Act as a B2B technology journalist with expertise in SaaS solutions. Write a 1,500-word thought leadership article targeting IT decision-makers at mid-market companies. Use a consultative tone that positions our client as an industry expert. Include three specific pain points, actionable solutions, and a subtle call-to-action. Follow the AIDA framework and optimize for the keyword ‘enterprise workflow automation.’”

The difference? The second prompt uses specific prompt engineering techniques (role-playing, target audience definition, tone specification, framework application) to achieve dramatically better results.

Healthcare Documentation Revolution

A large hospital system implemented AI-powered documentation tools but initially struggled with inconsistent outputs. By training their staff in prompt engineering vocabulary, they achieved notable improvements:

- Reduction in documentation time: 45%

- Improvement in accuracy: 62%

- Staff satisfaction increase: 78%

The key was understanding terms like “few-shot prompting” for medical scenarios, “context window management” for long patient histories, and “output filtering” for clinical accuracy.

Educational Innovation Success Story

A university’s Computer Science department integrated AI tools into its curriculum, but early attempts produced generic, unhelpful responses. After faculty mastered prompt engineering terminology, student engagement and learning outcomes improved significantly:

Students learned to use “scaffolded prompting” for complex programming problems, “chain-of-thought reasoning” for debugging, and “iterative refinement” for code optimization. These specific techniques, enabled by understanding the vocabulary, transformed AI from a hindrance into a powerful learning accelerator.

User Testimonials: Real Experiences with Prompt Engineering Mastery

“Learning prompt engineering terminology was like getting a new superpower. I went from getting generic responses to having AI create exactly what I needed for my consulting practice. Understanding concepts like ‘few-shot prompting’ and ‘role-playing prompts’ increased my productivity by 400%.” – Sarah Chen, Management Consultant, Singapore

“As a content marketing manager, mastering terms like ‘brand voice prompting’ and ‘customer persona integration’ revolutionized our AI-generated content. Our engagement rates doubled, and I now train other marketers on these techniques.” – Marcus Rodriguez, Content Marketing Manager, Austin, TX

“I was skeptical about AI in education until I learned proper prompt engineering vocabulary. Terms like ‘scaffolded prompting’ and ‘assessment prompts’ helped me create personalized learning experiences that my students love. It’s not about replacing teachers—it’s about amplifying our effectiveness.” – Dr. Emily Thompson, Professor of Biology, University of Melbourne

The Future of Prompt Engineering: Emerging Terms for 2025

As AI technology continues to evolve rapidly, new terminology emerges to describe increasingly sophisticated techniques and applications. Here are the cutting-edge terms that forward-thinking professionals are already incorporating into their vocabulary:

Next-Generation Techniques

Multi-Agent Prompting: Coordinating multiple AI models or instances to work together on complex tasks, with each agent having specialized roles and responsibilities.

Contextual Memory Management: Advanced techniques for helping AI models retain relevant information across extended conversations while efficiently managing context window limitations.

Semantic Prompt Optimization: Using natural language processing techniques to automatically improve prompt effectiveness by analyzing semantic relationships and optimization patterns.

Federated Prompt Learning: Collaborative approaches where organizations share prompt engineering insights and techniques while maintaining data privacy and competitive advantages.

Emerging Quality Metrics

Prompt Efficiency Score: Quantitative measures of how effectively a prompt achieves desired outcomes relative to its complexity and token usage.

Response Consistency Index: Metrics for evaluating how reliably a prompt produces outputs of similar quality across multiple generations.

Ethical Alignment Rating: Assessment frameworks for ensuring AI responses align with organizational values and ethical guidelines.

Integration and Automation

Prompt-as-Code: Treating prompts as software artifacts with version control, testing, and deployment processes.
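One way to picture prompt-as-code is a prompt stored as a versioned object with a unit test that guards its structure. The sketch below is an assumption about how a team might set this up, not an established standard; `PromptArtifact`, `SUMMARY_V1`, and the version string are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptArtifact:
    """A prompt kept under version control like any other source file."""

    name: str
    version: str
    template: str

    def render(self, **fields: str) -> str:
        return self.template.format(**fields)


SUMMARY_V1 = PromptArtifact(
    name="summarize",
    version="1.0.0",
    template="Summarize the text below in {n_bullets} bullet points.\n\n{text}",
)


def test_summary_prompt_mentions_bullet_count() -> None:
    """A regression test that fails if someone edits the template carelessly."""
    rendered = SUMMARY_V1.render(n_bullets="3", text="Example input.")
    assert "3 bullet points" in rendered
    assert rendered.endswith("Example input.")


test_summary_prompt_mentions_bullet_count()
print(SUMMARY_V1.name, SUMMARY_V1.version)
```

Bumping the version whenever the template changes makes it possible to trace which prompt produced a given output.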

Dynamic Prompt Generation: Systems that automatically create or modify prompts based on user behavior, context, or performance data.

Cross-Platform Prompt Portability: Techniques for adapting prompts to work effectively across different AI models and platforms.

Practical Implementation: Your 30-Day Vocabulary Building Plan

Mastering prompt engineering terminology requires systematic practice and application. Here’s a structured approach to building your vocabulary and skills:

Week 1: Foundation Building

- Days 1-2: Master basic terms (prompt, token, context window, temperature)

- Days 3-4: Practice zero-shot and few-shot prompting techniques

- Days 5-7: Experiment with role-playing prompts in your field

Week 2: Technique Development

- Days 8-10: Implement chain-of-thought prompting for complex tasks

- Days 11-12: Practice prompt chaining for multi-step processes

- Days 13-14: Learn and apply the CLEAR or CREATE frameworks

Week 3: Advanced Applications

- Days 15-17: Develop industry-specific prompt templates

- Days 18-19: Practice A/B testing different prompt variations

- Days 20-21: Implement quality control and bias detection techniques

Week 4: Integration and Optimization

- Days 22-25: Create a personal prompt library with documented techniques

- Days 26-27: Practice advanced techniques like self-consistency and tree of thoughts

- Days 28-30: Evaluate your progress and plan for continued learning

Daily Practice Exercises

Vocabulary Drills: Each day, use three new terms in actual AI interactions and document the results.

Term Application: Take a previous AI conversation and improve it using newly learned terminology and techniques.

Peer Discussion: Share your experiences with colleagues or online communities, using proper terminology to describe your successes and challenges.

Common Mistakes and How to Avoid Them

Even experienced professionals make predictable errors when learning prompt engineering terminology. Understanding these pitfalls helps speed up your learning:

Mistake 1: Terminology Misuse

Problem: Using technical terms incorrectly or interchangeably.

Solution: Keep a personal glossary with examples of proper usage.

Mistake 2: Over-Complexity

Problem: Using advanced techniques when simpler approaches would be more effective.

Solution: Always start with basic prompting and add complexity only when needed.

Mistake 3: Ignoring Context

Problem: Applying techniques without considering the specific AI model or use case.

Solution: Test techniques across different models and document what works where.

Mistake 4: Lack of Measurement

Problem: Not tracking which terminology and techniques produce better results.

Solution: Implement simple metrics to evaluate prompt effectiveness.

Industry Trends: How Terminology Evolves

The prompt engineering field evolves quickly, with new terms and concepts emerging monthly. Understanding these trends helps professionals stay current:

Standardization Efforts

Industry organizations are working to standardize prompt engineering terminology, making cross-organization communication easier.

Academic Integration

Universities are beginning to offer formal courses in prompt engineering, creating more rigorous definitions and frameworks.

Tool Development

New software tools are emerging that automatically suggest prompt improvements, often introducing new terminology for their features.

Regulatory Considerations

As AI use becomes more widespread, regulatory bodies are creating frameworks that may introduce new compliance-related terminology.

Frequently Asked Questions (FAQ)

What’s the difference between prompt engineering and prompt writing?

Prompt engineering is a systematic, technical discipline that involves understanding AI model behavior, testing techniques, and optimizing results. Prompt writing is simply crafting text to ask AI questions. Engineering implies a more methodical, scientific approach with measurable outcomes and iterative improvement processes.

How many prompt engineering terms should I know to be effective?

Most professionals find success with 20-30 core terms for basic competency and 50-75 terms for advanced practice. However, depth of understanding matters more than breadth: knowing how to apply 20 terms effectively is more valuable than superficially recognizing 100.

Do different AI models require different prompt engineering terminology?

While core concepts apply across models, some techniques work better with specific AI systems. For example, ChatGPT responds well to conversational prompts, while Claude excels with structured, detailed instructions. Understanding model-specific nuances is part of advanced prompt engineering.

How often does prompt engineering terminology change?

The field evolves quickly, with 10-15 new terms emerging each quarter. However, foundational concepts remain stable. Focus on mastering core terminology first, then stay up to date through industry publications and communities.

Can prompt engineering terminology help with AI safety and ethics?

Absolutely. Terms like “bias detection,” “adversarial prompting,” and “ethical alignment” are essential for responsible AI use. Understanding these concepts helps professionals implement safeguards and avoid problematic AI outputs.

What’s the ROI of learning prompt engineering terminology?

Organizations report 40-60% improvements in AI output quality and 30-50% reductions in iteration time when teams master prompt engineering vocabulary. Individual professionals often see similar productivity gains within 2-3 months of systematic learning.

Should non-technical users learn prompt engineering terminology?

Yes, even basic familiarity with terms like “few-shot prompting,” “role-playing,” and “context window” dramatically improves AI interactions. You don’t need to be a developer to benefit from understanding how to communicate more effectively with AI systems.

Conclusion: Your Path to AI Communication Mastery

Mastering prompt engineering terminology isn’t just about learning new words; it’s about unlocking the full potential of artificial intelligence in your professional and personal life. Prompt engineering is the art and science of crafting questions (i.e., “prompts”) for AI models that result in better and more useful responses. As when you’re talking to a person, the way you phrase your question can lead to dramatically different responses.

The professionals who thrive in our AI-powered future will be those who can bridge the gap between human intent and machine capability. This glossary provides you with the essential vocabulary to make that bridge strong and reliable. From understanding basic concepts like tokens and context windows to mastering advanced techniques like chain-of-thought reasoning and multi-agent prompting, you now have the linguistic tools to communicate effectively with AI systems.

Remember that terminology is just the beginning. True expertise comes from applying these concepts consistently, experimenting with different approaches, and continuously refining your techniques based on real-world results. The 50+ terms we have explored represent the current state of the art, but the field continues to evolve quickly.

Your journey to prompt engineering mastery should be systematic and practical. Start with the foundational terms, practice them in your daily AI interactions, and gradually incorporate more advanced concepts as your confidence and competence grow. Document your successes, learn from your mistakes, and share your insights with others who are on similar learning journeys.

The investment you make in learning this vocabulary pays dividends across every aspect of your AI usage. Whether you’re generating content, solving problems, conducting research, or automating processes, the right terminology enables the right techniques, which produce the right results.

Ready to transform your AI interactions from beginner to expert level? Start by choosing five terms from this glossary that relate directly to your current work challenges. Practice using them in actual AI conversations this week, and document the improvements in your results. Join the growing community of prompt engineering professionals who are already shaping the future of human-AI collaboration.