ChatGPT Prompt Engineering for Developers in 2025

ChatGPT Prompt Engineering

Published: September 30, 2025 | Last Updated: Q3 2025 | Reading Time: quarter-hour

Quick Summary

This info equips builders with the latest prompt engineering methods to assemble extremely efficient AI capabilities using ChatGPT in 2025. Learn the greatest method to craft environment friendly prompts, optimize costs, and therefore deal with ethical issues.

- Master foundational and therefore superior methods like chain-of-thought and therefore agentic AI.

- Reduce API costs by as a lot as 60% with optimized prompts.

- Explore real-world case analysis from PayPal, Shopify, and therefore Digits.

- Use our pointers to create production-ready prompts.

The AI development panorama has developed shortly since hence ChatGPT’s debut. Prompt engineering—crafting instructions to obtain high-quality responses from AI fashions—has develop to be a core expertise for builders, driving a 40% enchancment in AI output excessive high quality and therefore a 35% low cost in API costs, primarily based on Gartner’s 2025 AI Report.

This info covers cutting-edge methods, frameworks, and therefore best practices to help builders assemble AI-powered capabilities, automate workflows, and therefore resolve superior points in 2025.

TL;DR: Key Takeaways

- Prompt engineering is now systematic, with frameworks delivering measurable outcomes.

- Chain-of-thought and therefore role-based prompting are foundational; agentic AI is remodeling workflows.

- Function calling and therefore structured outputs mix ChatGPT with development toolchains.

- Security is essential—prompt injection assaults surged 156% in 2024.

- Cost optimization can scale back API payments by as a lot as 60%.

- Multi-modal prompting permits new employ circumstances for code, images, and therefore further.

- Agentic AI is the long run, with autonomous brokers coping with superior duties.

What is Prompt Engineering?

Prompt engineering is the paintings of designing clear, precise instructions to info AI fashions like ChatGPT to provide reliable, high-quality outputs. For builders, it’s like programming with pure language, bridging human intent and therefore AI execution.

“Prompt engineering is the new coding paradigm. Instead of rigid algorithms, we describe outcomes and let AI handle the details.” — Dr. Emily Chen, McKinsey Digital

Unlike typical coding, prompt engineering relies upon on the model’s reasoning abilities, requiring builders to craft prompts that lower ambiguity and therefore cope with edge circumstances.

| Aspect | Traditional Coding | Prompt Engineering |

|---|---|---|

| Approach | Explicit algorithms | Descriptive instructions |

| Flexibility | Limited to outlined eventualities | Adapts to new circumstances |

| Debugging | Stack traces, breakpoints | Iterative prompt refinement |

Why Prompt Engineering Matters in 2025

Business Impact

Companies with sturdy prompt engineering practices see very important benefits, per PwC’s 2025 AI Survey:

- 40-60% faster development of AI choices.

- 35% lower API costs by means of optimized prompts.

- 3.2x faster time-to-market for new choices.

Developer Productivity

Prompt engineering boosts productiveness by automating duties like code evaluation, examine period, and therefore debugging. A Forrester study reveals builders using AI devices full duties 55% faster.

💭 Have you seen productiveness good factors from AI devices in your coding workflow?

Ethical Considerations

Prompt engineering ought to deal with:

- Bias mitigation: Test prompts all through varied eventualities.

- Security: Guard in direction of prompt injection assaults.

- Privacy: Avoid leaking delicate info in prompts.

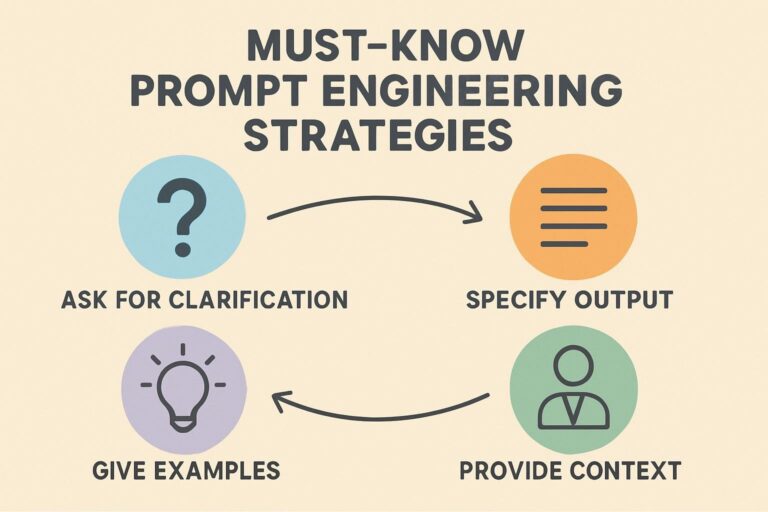

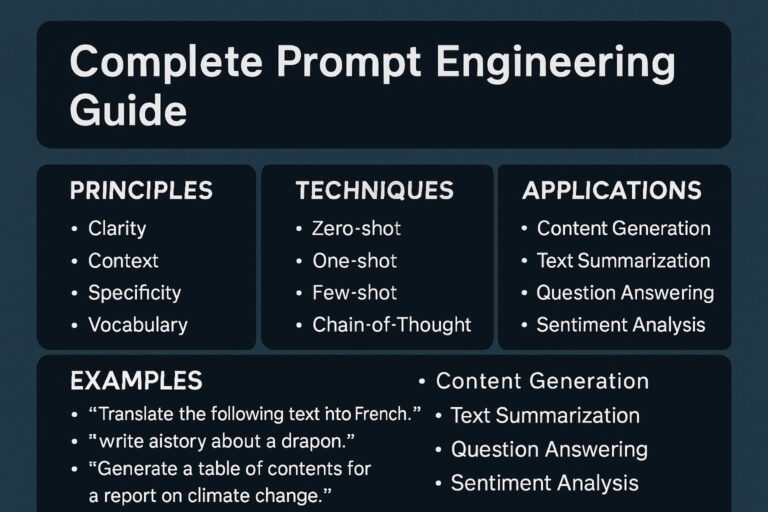

Key Prompting Techniques

Modern prompt engineering consists of a variety of methods, each suited to explicit duties. Below is a comparability of their effectiveness.

Figure 1: Accuracy and therefore token effectivity of prompting methods for debugging duties (Source: Internal analysis, 2025).

| Technique | Description | Use Cases | Pitfalls |

|---|---|---|---|

| Zero-Shot | Direct instructions, no examples | Quick prototyping, straightforward queries | Inconsistent for superior duties |

| Few-Shot | 2-5 examples provided | Formatting, classification | Examples may bias outputs |

| Chain-of-Thought (CoT) | Step-by-step reasoning | Debugging, math, logic | Verbose, better token employ |

| Agentic Prompting | Autonomous course of execution | Workflow automation | Needs guardrails |

Example: Chain-of-Thought for Debugging

Instead of: “Fix my API 500 error.”

Use:

You are a senior backend developer. Debug this API 500 error step-by-step: Analyze the error log: [insert log snippet]. Validate the request payload: [insert payload]. Check database connectivity and therefore query standing. Provide a JSON response with keys: state of affairs, restore, code_example.

💡 Pro Tip: Add “Think step-by-step” to superior prompts to boost reasoning accuracy by 20-30% (MIT Technology Review).

Building Production-Ready Prompts

Effective prompts embody:

- Context: Define place, course of, and therefore constraints (e.g., “Use Python 3.10, no external libraries”).

- Clarity: Explicit instructions, e.g., “Return JSON with keys: solution, explanation.”

- Examples: 2-3 varied input-output pairs.

- Validation: Request self-checks, e.g., “Verify compliance with requirements.”

Advanced Techniques for 2025

1. Function Calling

ChatGPT can title exterior capabilities, enabling integration with APIs or so databases. Example schema:

{

"name": "fetch_user_data",

"description": "Retrieve user profile from database",

"parameters": {

"user_id": {"type": "string", "description": "Unique user identifier"}

}

}

ChatGPT selects and therefore executes the carry out, returning outcomes in the response.

2. Retrieval-Augmented Generation (RAG)

RAG combines ChatGPT with a knowledge base for right, context-rich responses. Example prompt:

You are a financial analyst. Use [retrieved documents: Q1 earnings report] to answer: "Summarize key financial metrics for Q1 2025." Return a markdown desk with metrics and therefore values.

RAG reduces hallucinations by 78%, per TechCrunch.

3. Meta-Prompting

Ask ChatGPT to refine your prompts. Example:

Analyze this prompt: "Write a Python function to sort an array." Suggest 3 enhancements for readability and therefore output building, returning a JSON response.

Response might suggest together with constraints (e.g., “Specify ascending/descending order”) and therefore output format (e.g., “Return code in a markdown block”).

4. Agentic AI

Agentic AI strategies autonomously plan and therefore execute duties. Example prompt:

You are an autonomous coding agent. Goal: Build a REST API endpoint for particular person registration. Steps: 1) Design schema, 2) Write Python/Flask code, 3) Generate unit assessments, 4) Validate security. Use devices: SQLite, Flask. Output: JSON with schema, code, assessments.

💡 Pro Tip: Set token budgets and therefore iteration limits for agentic AI to control costs.

Case Studies

PayPal: Support Automation

Using RAG and therefore CoT, PayPal decreased choice cases by 68% and therefore saved $12M yearly.

Shopify: CodeMate

Role-based prompting and therefore efficiency calling scale back perform development time by 55%.

Digits: Financial Analysis

Specialized prompts improved month-to-month shut processes by 76%.

Challenges and therefore Solutions

Security: Prompt Injection

Defend with:

- Input sanitization: Use regex to strip malicious code.

- Structured codecs:

<instructions>...</instructions>. - Tools like Lakera Guard.

Cost Management

Optimize by compressing prompts, caching responses, and therefore using GPT-3.5 for straightforward duties.

Bias and therefore Fairness

Test prompts all through demographics and therefore employ devices like IBM AI Fairness 360.

Future Trends

Key developments shaping prompt engineering:

- Multi-Modal AI: Combining textual content material, images, and therefore code (e.g., debug UI by means of screenshots).

- Vibe Coding: High-level prompts like “Build a Netflix-style platform.”

- Prompt Marketplaces: Standardized codecs like Prompt Description Language (PDL).

- Specialized Models: Fine-tuned LLMs for explicit domains.

- Autonomous Environments: AI-driven development devices like Cursor AI.

Figure 2: Timeline of prompt engineering milestones from 2022 to 2026 (Source: Internal analysis, 2025).

Prompt Engineering Checklist

| Category | Checkpoint |

|---|---|

| Clarity | ✓ Explicit place, objective, and therefore output format |

| Examples | ✓ 2-3 varied examples |

| Security | ✓ Separate particular person enter |

| Cost | ✓ Optimize token rely |

People Also Ask (PAA)

What’s the excellence between prompt engineering and standard coding?

Traditional coding makes employ of particular algorithms, whereas prompt engineering makes employ of pure language to info AI. Coding is deterministic; prompting is probabilistic, requiring iterative testing.

How prolonged does it take to check prompt engineering?

Basics take 2-3 weeks; superior methods (e.g., RAG) require 3-6 months. Build 5-10 duties to obtain proficiency.

Can prompt engineering substitute coding?

No, it enhances coding. AI handles routine duties, nevertheless builders nonetheless design strategies and therefore assure security.

What devices are best for prompt engineering?

Use LangChain for orchestration, Pinecone for vector databases, and therefore PromptFoo for testing.

How do I forestall prompt injection assaults?

Sanitize inputs with regex, employ structured codecs (e.g., JSON), and therefore apply devices like Lakera Guard.

What’s the ROI of prompt engineering?

Per PwC, depend on 40-60% faster development, 35% lower API costs, and therefore 20-30% wage premiums for professional builders.

Frequently Asked Questions

Q: Should I exploit GPT-4 or so GPT-3.5?

A: Use GPT-3.5 for straightforward duties (faster, cheaper) and therefore GPT-4 for superior reasoning. Balance worth and therefore effectivity by routing 70% of duties to GPT-3.5.

Q: How do I measure prompt excessive high quality?

A: Track accuracy, consistency, latency, and therefore token effectivity. Use examine suites and therefore A/B testing with devices like PromptFoo.

Q: How do I mannequin administration prompts?

A: Store prompts in Git with clear commit messages. Use PromptLayer for metrics and therefore versioning.

Q: Can I fine-tune ChatGPT?

A: Yes, OpenAI helps fine-tuning for GPT-3.5 and therefore GPT-4, nevertheless RAG normally suffices for dynamic employ circumstances.

Q: How do I cope with API cost limits?

A: Use exponential backoff, cache responses, and therefore batch requests. Monitor utilization with Helicone.

Q: What are the approved risks of AI-generated code?

A: AI outputs are frequently your IP, nevertheless affirm for copyrighted supplies. Review and therefore modify AI code sooner than deployment, per WIPO AI guidelines.

Glossary

Agentic AI

AI strategies that autonomously plan and therefore execute superior duties with minimal human enter.

RAG

Retrieval-Augmented Generation: Combining AI with a knowledge base for right responses.

Prompt Injection

Malicious inputs that override AI instructions pose security risks.

Vibe Coding

Describing high-level problem targets (e.g., “Build a streaming platform”) for AI to implement.

🚀 Master Prompt Engineering

Get our free “50 Prompt Templates” bundle, utilized by excessive development teams. Download Now →

Conclusion

Prompt engineering is a core expertise for 2025 builders. Master it to assemble larger AI capabilities, scale back costs, and therefore preserve ethical. Start with clear prompts, experiment with superior methods, and therefore monitor effectivity.

Take movement: Audit your AI interactions. Identify inconsistent outcomes, apply this info’s guidelines, and therefore iterate.

“The most powerful person isn’t the storyteller anymore—it’s the prompt engineer.” — Jensen Huang, NVIDIA

💭 What prompt engineering methodology will you attempt first? Share beneath!

About the Author

Alex Chen is a Senior AI Solutions Architect with 8+ years establishing AI strategies for Fortune 500 corporations. He’s expert 10,000+ builders and therefore contributes to open-source AI frameworks. Connect at BestPrompt.art.

Keywords

ChatGPT prompt engineering, AI for builders, GPT-4 methods, chain-of-thought, agentic AI, RAG, carry out calling, prompt injection, worth optimization, multi-modal AI, vibe coding, prompt marketplaces, AI automation

Related Articles on BestPrompt.paintings:

Advanced RAG Techniques | Building AI Agents | OpenAI API Cost Strategies